GitHub Actions: A Cloudy Day for Security - Part 1

Binary Security spend a lot of time testing and securing CI/CD setups, especially GitHub Actions. In this two-part series we cover some of the many security considerations when using GitHub Actions, with a focus on securing your CI/CD pipeline against adversaries with contributor access to your GitHub repository. We also look at securely integrating GitHub Actions with Azure using OIDC.

This post is focused purely on GitHub Actions. For details on integrating GitHub Actions with Azure, see part 2.

If you prefer consuming your content in video form, you can view my presentation from NDC Security 2025 here that covers many of the same things as this post.

Outline

- What are GitHub Actions?

- GitHub Action Security

- A Slight Detour: Script Injection

- Branch Protections

- GitHub Action Secrets

- Environments

- Tags

- Summary: GitHub-only Setup

- Conclusion

What are GitHub Actions?

GitHub Actions are the GitHub way to do CI/CD. According to their own documentation, they let you build, test and deploy code right from GitHub. It basically lets you automate and streamline any task you want to do on GitHub, whether that be building, running tests, deploying, ensuring the correct review process, triaging new issues or something else.

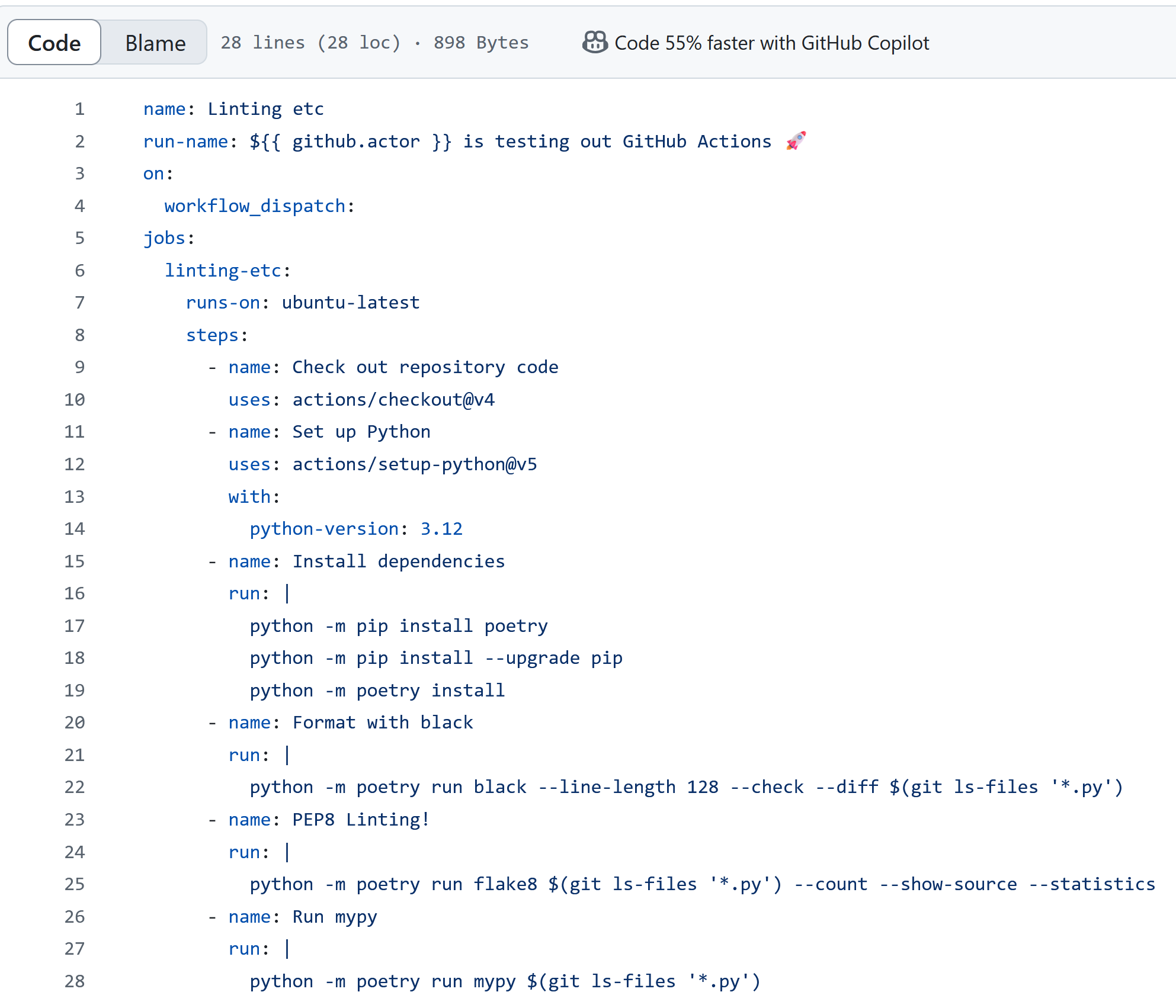

In practice, GitHub Actions run as workflows. A workflow is a YAML file living in a GitHub repository, typically under .github/workflows, which follows a specific syntax. For example, the following workflow runs some checks on a Python codebase:

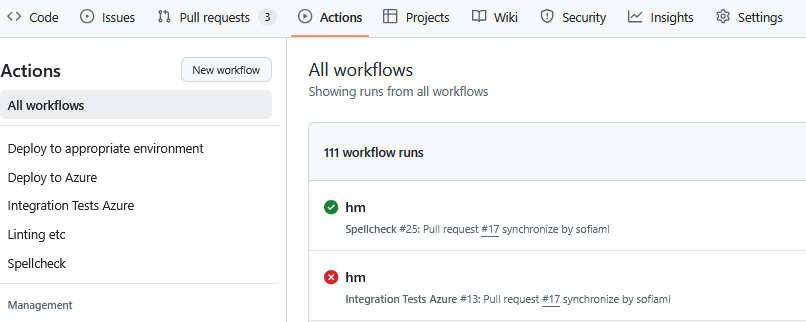

On the GitHub view of a repository there is an “Actions” tab where you can view all that repository’s workflows, workflow runs and details about a specific run:

GitHub Action Security

The workflows are responsible for potentially sensitive operations like building artifacts for production and deploying to different environments. As a part of this, the workflows may be handling credentials or access tokens, and may have access to cloud environments or permission to publish packages. We therefore need to know how to properly secure a sensitive workflow.

If we focus on the security of the workflows in a specific repository, there are two main attack scenarios of interest:

- An attacker with read permissions to the repository (e.g. a random person on the internet viewing a public repository)

- An attacker with write permissions to the repository

In our experience, there are lots of good resources on the former case, but not so much for the latter.

Why would you need to secure your workflows against people with write access? Surely the people with write access are the developers who are supposed to be contributing to your repository in the first place?

As a first step in securing your GitHub Actions, make sure that this is in fact the case. Once your organization has reached a certain size, new employees should probably not be granted write access to your whole GitHub organization by default. This both reduces the blast radius of a compromised user account and helps protect against attacks where an attacker has managed to fake a company account which has then been added to your GitHub organization. Taking this a step further, even if I’m a developer working on product A, I probably don’t need write access to repositories for the completely unrelated project B.

Okay, so the only people with write permissions to your repository are the developers who interact with it. Unfortunately, there is still the risk of an insider threat or a compromised developer account. The aim of this post is to configure things in such a way that no single account can take over or otherwise negatively impact your production environment and production secrets. This is often referred to as the four-eyes principle.

A Slight Detour: Script Injection

Even though this post is mainly about attackers with collaborator access, there is one very common attack vector that can sometimes be exploited with just read permissions, and it will come up again later.

GitHub Workflow Contexts and Expressions

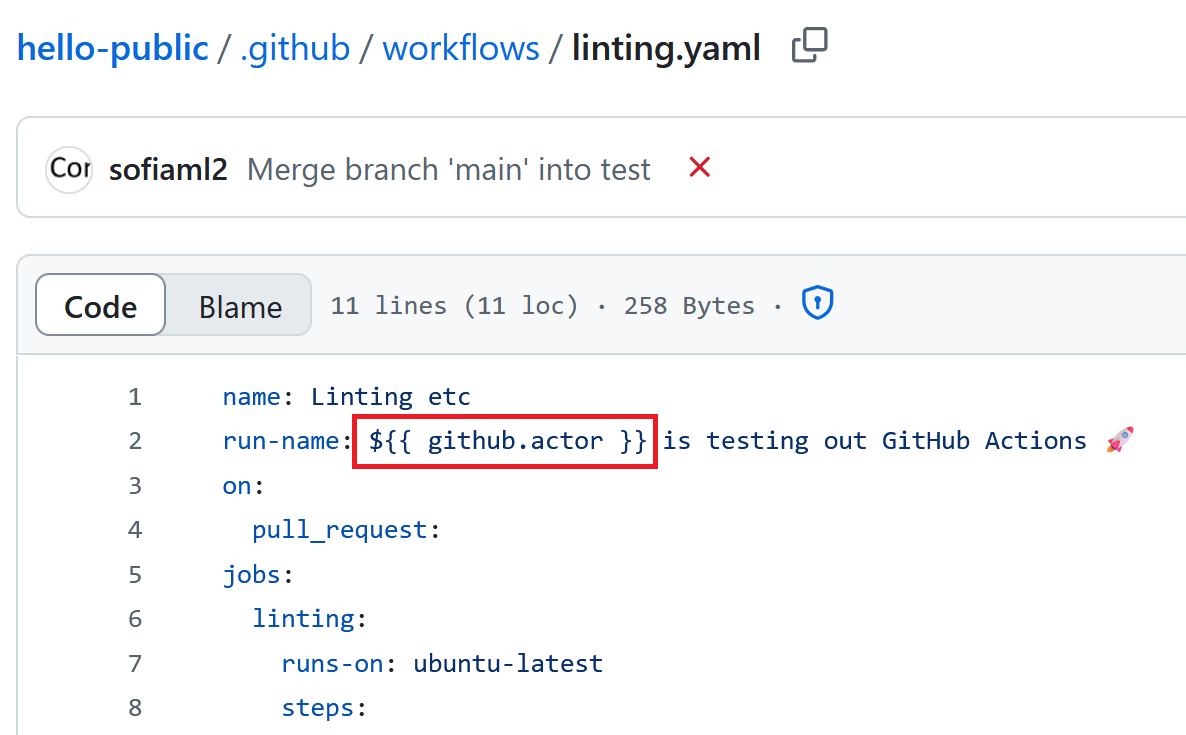

If you’ve looked at any workflows, you have probably seen syntax like ${{ github.actor }}. This ${{...}} syntax is known as an expression, and it allows you to programmatically evaluate a combination of literals, contexts and functions. Contexts let you get things like workflow metadata, variables and secrets, runner metadata and environment variables.

So let’s say I want the title of my action run to include the name of the person that initiated the run. I can achieve this with the following workflow:

```yaml
name: Linting etc
run-name: ${{ github.actor }} is testing out GitHub Actions 🚀
on:
  pull_request:
jobs:
  linting:
    runs-on: ubuntu-latest
    steps:
      - name: Check out repository code
        uses: actions/checkout@v4
      # <...and so on...>
```

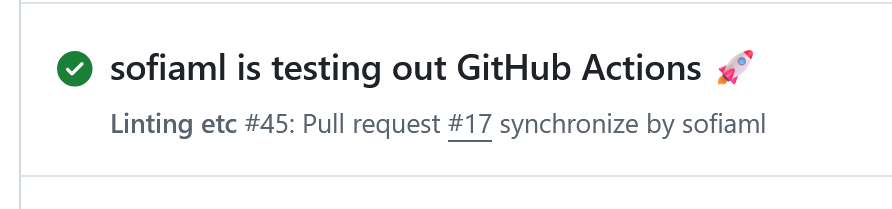


When I run this workflow, the ${{ github.actor }} expression is replaced by my GitHub username:

Script Injection: an Example

The catch with these contexts is that GitHub evaluates the expressions and substitutes the results into the workflow before any steps are actually run. Consider the following workflow:

```yaml
name: New Issue Created
on:
  issues:
    types: [opened]
jobs:
  deploy:
    runs-on: ubuntu-latest
    permissions:
      issues: write # Required to modify issues
    steps:
      - name: New issue
        run: |
          echo "New issue ${{ github.event.issue.title }} created"
      - name: Add "new" label to issue
        uses: actions-ecosystem/action-add-labels@v1
        with:
          github_token: ${{ secrets.GITHUB_TOKEN }}
          labels: new
```

This workflow triggers whenever a new issue is opened in the repository. It then prints the issue title and adds the new label to the newly opened issue.

Now, someone with read permissions to the repository creates an issue with the title $(id):

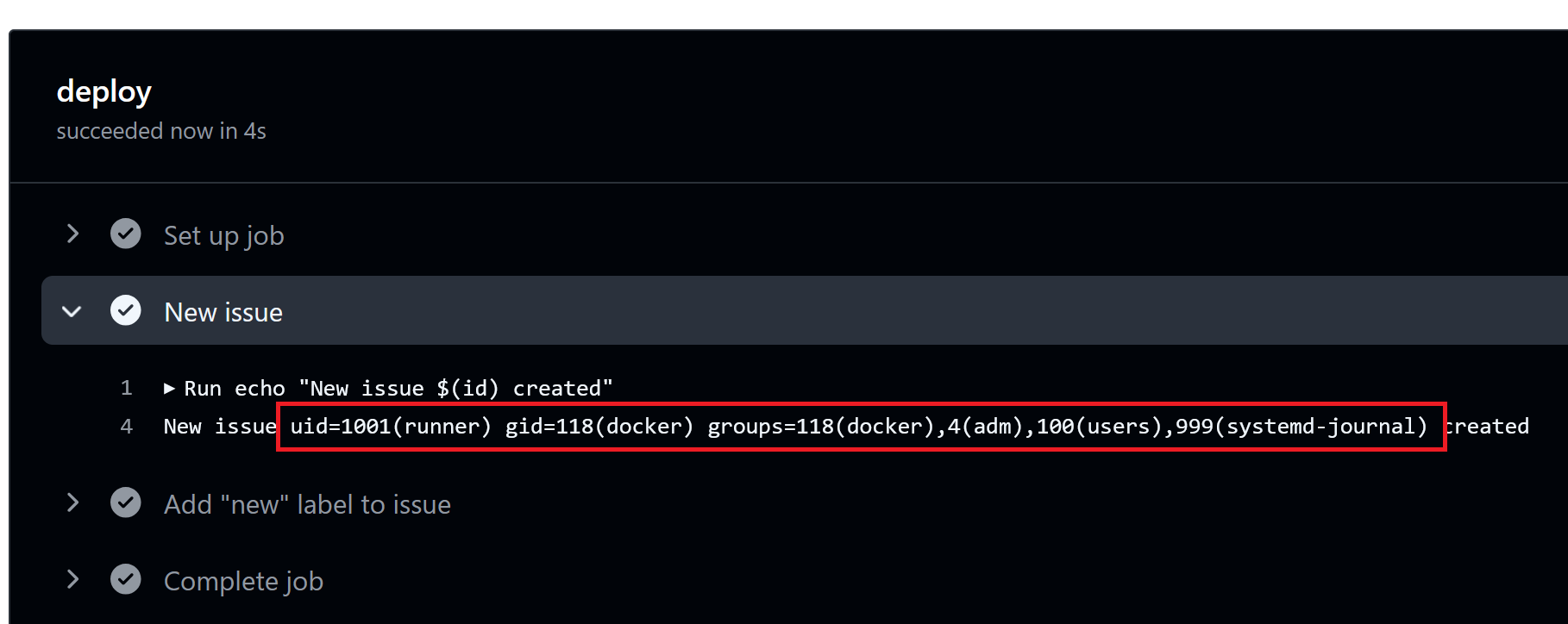

Creating the issue triggers the workflow:

Perhaps surprisingly, the run prints:

```
New issue uid=1001(runner) gid=118(docker) groups=118(docker),4(adm),100(users),999(systemd-journal) created
```

What has happened here is that GitHub first evaluated ${{ github.event.issue.title }} to $(id), and then performed the run step, which now contains the code echo "New issue $(id) created". This of course results in the Linux id command being executed, which is what we’re seeing in the run output.

Be aware that no amount of tweaking the Linux command (for example, changing the quotes) will fix this injection vulnerability, as we can just inject whatever closing quotes or conditions we need to execute our code.
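To make the mechanism concrete, here is a minimal Python model. The render function is a hypothetical stand-in for GitHub’s expression evaluation: it does plain textual substitution, which is the property that matters here, and it is not how GitHub implements it. The rendered script is then handed to bash, as a run step would be on a Linux runner, so this sketch assumes bash and the id command are available.

```python
import re
import subprocess

# Hypothetical stand-in for GitHub's expression evaluation: every ${{ name }}
# is replaced by the raw context value as plain text, before any shell runs.
EXPRESSION = re.compile(r"\$\{\{\s*([\w.]+)\s*\}\}")


def render(template: str, context: dict) -> str:
    # A function replacement keeps re.sub from interpreting backslashes
    # in the (attacker-controlled) value.
    return EXPRESSION.sub(lambda m: context[m.group(1)], template)


def run_step(script: str) -> str:
    # Run the already-rendered script with bash, like a `run:` step.
    result = subprocess.run(["bash", "-c", script], capture_output=True, text=True)
    return result.stdout


# The issue title from the post: bash sees $(id) as code, not data.
double_quoted = render('echo "New issue ${{ github.event.issue.title }} created"',
                       {"github.event.issue.title": "$(id)"})

# Switching to single quotes does not help: the attacker closes the quote.
single_quoted = render("echo 'New issue ${{ github.event.issue.title }} created'",
                       {"github.event.issue.title": "'; id; echo '"})

for script in (double_quoted, single_quoted):
    print(script)
    print(run_step(script))
```

In both cases the title is already part of the script text when bash parses it, which is why quoting inside the template cannot separate code from data.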

The proper defense is to use the env keyword. This sets up environment variables which then are accessible from within the workflow steps. For our vulnerable issue workflow, the fixed version looks like this:

```yaml
# <...>
jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - name: New issue
        env:
          TITLE: ${{ github.event.issue.title }}
        run: |
          echo "New issue $TITLE created"
      # <...>
```

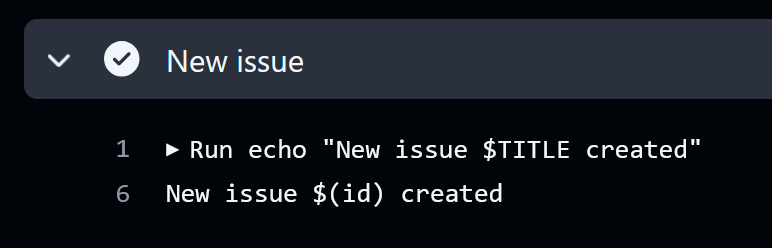

The command that is now run is exactly what you expect:

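Note that you have to read the variable through the shell, as $TITLE, for this to work. If you instead set up the env as above and then use ${{ env.TITLE }} in the run command, you’re back to script injection, since that expression is also substituted into the script before it runs.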
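The same toy model shows the difference between the two approaches. Here the step’s env block is modelled as real environment variables for the bash process, while ${{ env.TITLE }} goes through the hypothetical render function like any other expression. Again, this is a sketch assuming a Linux machine with bash, not GitHub’s actual runner code.

```python
import os
import re
import subprocess

# Same hypothetical textual substitution as in the previous sketch.
EXPRESSION = re.compile(r"\$\{\{\s*([\w.]+)\s*\}\}")


def render(template: str, context: dict) -> str:
    return EXPRESSION.sub(lambda m: context[m.group(1)], template)


def run_step(script: str, step_env: dict) -> str:
    # The env: block becomes ordinary environment variables for bash.
    result = subprocess.run(["bash", "-c", script], capture_output=True, text=True,
                            env={**os.environ, **step_env})
    return result.stdout.strip()


title = "$(id)"
step_env = {"TITLE": title}
context = {"github.event.issue.title": title, "env.TITLE": title}

# Safe: no expression in the script, and bash expands $TITLE as plain data.
safe = run_step(render('echo "New issue $TITLE created"', context), step_env)

# Unsafe again: ${{ env.TITLE }} is pasted into the script text first.
unsafe = run_step(render('echo "New issue ${{ env.TITLE }} created"', context), step_env)

print(safe)    # New issue $(id) created
print(unsafe)  # New issue uid=... created
```

The safe version works because bash does not re-run command substitution on the result of a variable expansion, so the title stays inert text.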

Am I Safe?

The best thing you can do to protect against script injection is to never use a GitHub context directly in a run command, and always first put them in an env variable. This costs you nothing (maybe a few lines of code), and guarantees that you are not vulnerable to this particular attack.
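If you want to check existing workflows for this pattern, a rough heuristic is to look for ${{ ... }} inside run steps. The sketch below is a line-based scan rather than a real YAML parser, so treat find_run_expressions (a hypothetical helper, not a GitHub tool) as a starting point for manual review: it can miss unusual formatting, and it ignores other places where expressions end up being executed as code.

```python
import re

# Heuristic, line-based scan (not a real YAML parser): find each `run:` key
# and report ${{ ... }} expressions in its inline value or block scalar body.
RUN_KEY = re.compile(r"^(?P<prefix>\s*(?:-\s+)?)run:\s*(?P<value>.*)$")
EXPRESSION = re.compile(r"\$\{\{.*?\}\}")


def find_run_expressions(workflow_text: str) -> list:
    """Return (1-based line number, expression) pairs found inside run steps."""
    findings = []
    lines = workflow_text.splitlines()
    i = 0
    while i < len(lines):
        match = RUN_KEY.match(lines[i])
        if not match:
            i += 1
            continue
        key_column = len(match.group("prefix"))
        value = match.group("value").strip()
        findings += [(i + 1, e) for e in EXPRESSION.findall(value)]
        i += 1
        if value[:1] in ("|", ">"):
            # Block scalar: the script is every following line indented deeper
            # than the run key (blank lines inside the script are skipped over).
            while i < len(lines) and (
                not lines[i].strip()
                or len(lines[i]) - len(lines[i].lstrip()) > key_column
            ):
                findings += [(i + 1, e) for e in EXPRESSION.findall(lines[i])]
                i += 1
    return findings


VULNERABLE = """\
jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - name: New issue
        run: |
          echo "New issue ${{ github.event.issue.title }} created"
"""

FIXED = """\
jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - name: New issue
        env:
          TITLE: ${{ github.event.issue.title }}
        run: |
          echo "New issue $TITLE created"
"""

print(find_run_expressions(VULNERABLE))  # [(7, '${{ github.event.issue.title }}')]
print(find_run_expressions(FIXED))       # []
```

Expressions under env: are deliberately not flagged, since that is exactly the recommended pattern.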

There are of course still cases where you may want to know which GitHub workflow contexts are vulnerable. The first thing to know is which triggers (the things following the on: keyword in a workflow file) allow an account with read permissions to trigger a run. There are some triggers where it’s pretty clear cut that someone with read permissions can trigger the run, as described in the following table.

| Trigger name | How to trigger | Restrictions |
|---|---|---|
| discussion | Start a new discussion | There is an organization setting to disallow readers from starting new discussions, but readers are allowed by default |
| discussion_comment | Comment on a discussion | |
| fork | Fork a repository | For private repositories, permission to fork repositories must have been granted at the organization level. This is disabled by default. Anyone can fork a public repository. |
| issue_comment | Comment on an issue or a pull request | |
| issues | Create an issue | |
| pull_request | Create a pull request | If the pull request is from a fork, permissions may be needed for actions to run. NB! Readers can create a pull request without forking as long as there are at least two differing branches already present. |
| pull_request_review | Review a pull request | |
| pull_request_review_comment | Make a comment as part of a pull request review | |
| pull_request_target | Create a pull request | Same as for pull_request |
| watch | Star a repository | |

It is tricky to try to enumerate all cases where a run can be triggered by someone with read permissions, as one can set up arbitrarily complicated triggers if one really wants to. For example, say there is a workflow with a check_run trigger. This is triggered by a third party GitHub app interacting with a check run. But what causes the GitHub app to do this? Who knows!

And then there are triggers which are triggered by other workflows, namely workflow_run and workflow_call. For them we need to check what workflows trigger the target workflow, and then look at their permissions.

The next thing to know is which context values the reader has control over. There are a huge number of values set, many of them nested and dependent on what type of event caused the workflow to trigger. GitHub have made a list of context data that is likely to be used for injection attacks, but this will need to be investigated on a case-by-case basis.
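As a rough first pass over a repository, one could flag workflows whose triggers appear in the table above, plus the indirect triggers that need manual review. This sketch assumes the workflow has already been parsed into a Python dict by a YAML library; classify and its two sets are hypothetical helpers, and the lists only cover the cases discussed here, not every way a reader might cause a run.

```python
# Events from the table above that someone with read access can trigger.
READER_TRIGGERABLE = {
    "discussion", "discussion_comment", "fork", "issue_comment", "issues",
    "pull_request", "pull_request_review", "pull_request_review_comment",
    "pull_request_target", "watch",
}
# Events whose real cause lives elsewhere and needs case-by-case review.
NEEDS_REVIEW = {"check_run", "workflow_run", "workflow_call"}


def triggers_of(workflow: dict) -> set:
    # YAML 1.1 loaders such as PyYAML parse the bare key `on` as boolean True,
    # so look under both keys.
    on = workflow.get("on", workflow.get(True))
    if on is None:
        return set()
    if isinstance(on, str):
        return {on}
    return set(on)  # a list of event names, or a mapping of event -> filters


def classify(workflow: dict) -> dict:
    events = triggers_of(workflow)
    return {
        "reader_triggerable": sorted(events & READER_TRIGGERABLE),
        "needs_review": sorted(events & NEEDS_REVIEW),
    }


# The issue workflow from earlier, as a YAML 1.1 loader would return it.
print(classify({True: {"issues": {"types": ["opened"]}}, "jobs": {}}))
# {'reader_triggerable': ['issues'], 'needs_review': []}
```

A hit only tells you where to look; whether it is exploitable still depends on which context values the reader controls and how they are used.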

Branch Protections

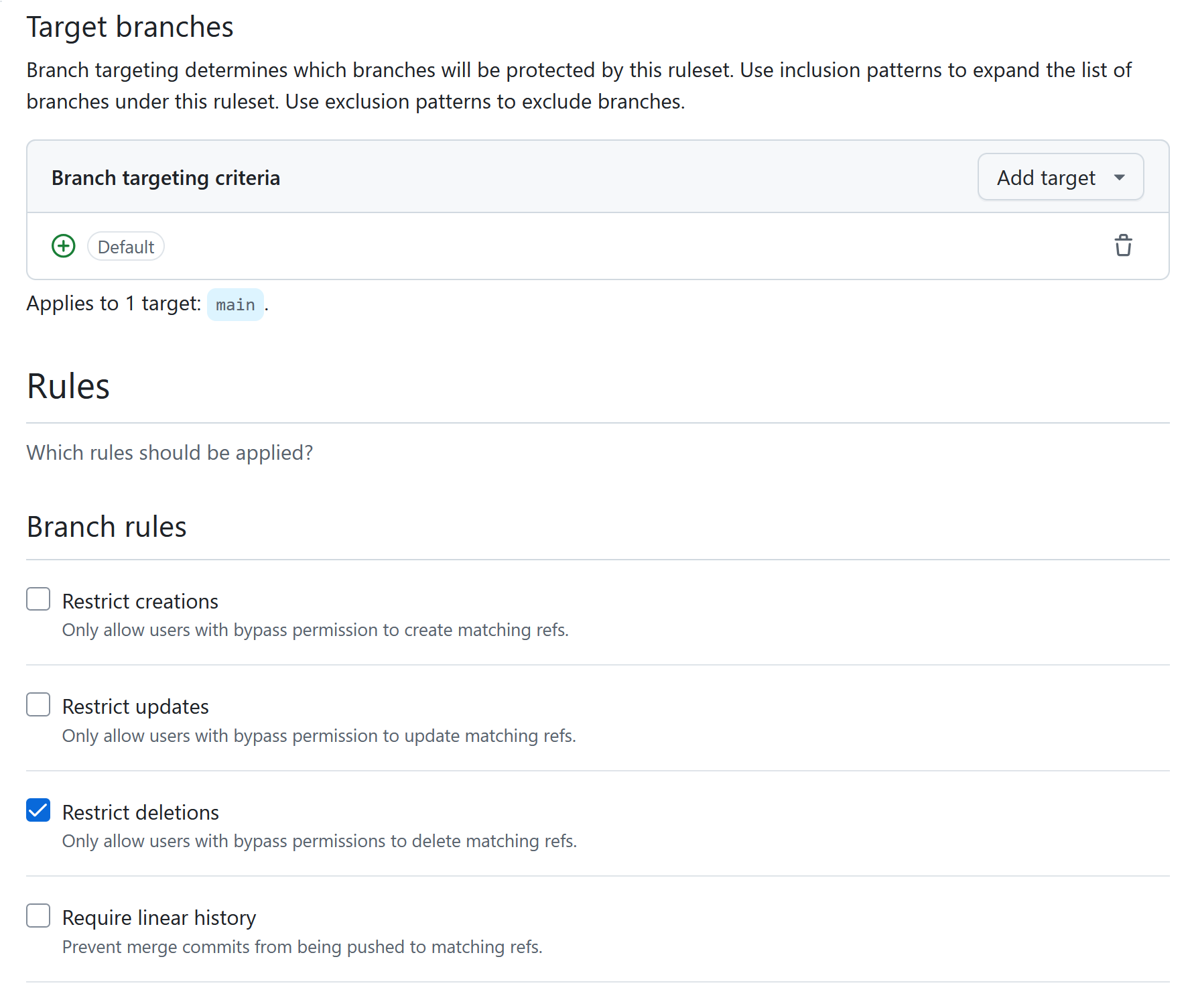

With that out of the way, let’s get back to attack vectors that require contributor access. Consider a hypothetical repository where any changes to the main branch are automatically deployed to production. Does that not mean that any contributor can deploy directly to production by pushing to main? Luckily not, thanks to the concept of branch protections. These can be configured under repository settings by a user with admin permissions:

The way this works is you choose which branch(es) your rules apply to, and then there are a bunch of different protections you can enable, outlined below.

Restrict creations/updates/deletions: All of these restrict the specified action to only users with bypass permissions. Bypass permissions are added separately. This obviously has no effect unless the set of users with bypass permissions is more restrictive than the set of users with contributor access to begin with.

Require linear history: This option is not directly security relevant.

Require merge queue: This option is not directly security relevant.

Require deployments to succeed: TL;DR: not a good security control.

In the GitHub world, a successful deployment to an environment just means that a workflow running in that environment has completed successfully. If this doesn’t make sense, you may want to read about environments below and then jump back here afterwards. The way this option works is you specify one or more environments, and then a commit cannot make it into the protected branch unless a workflow has run successfully off that commit in each of the specified environments.

Jumping ahead of ourselves for a moment, if there are no restrictions on the environment, this control is pointless as a security measure, as a malicious actor may simply modify an existing workflow to run in the specified environment (and to always pass).

As we’ll see, the main way to protect an environment is by tying it to a protected branch, in which case we’ve now made a circular dependency of security controls, where the target branch is only as protected as the environment, which in turn is only as protected as the branch it is tied to. If security of the original branch is the goal, I would not go this route.

The other way to protect an environment is by adding required reviewers. Technically, if configured properly such that a user cannot approve their own deployments, this is a way to achieve the four-eyes principle. In my opinion this is a highly sketchy way to achieve this. Let’s assume our main branch has this protection, and requires deployment to the env environment. One can deploy to the env environment from any branch, but it requires an approval. A malicious actor with contributor access pushes some bad code to a test branch, and requests to deploy the test branch to the env environment. Is the person who approves the deployment supposed to review all code on the test branch? I probably wouldn’t. My expectation is that code which should be reviewed goes via a pull request, which coincidentally also is the proper way to protect a branch, as we’ll see below.

Require signed commits: This is an important security feature, but it is not directly applicable for the four-eyes principle and so will not be discussed here.

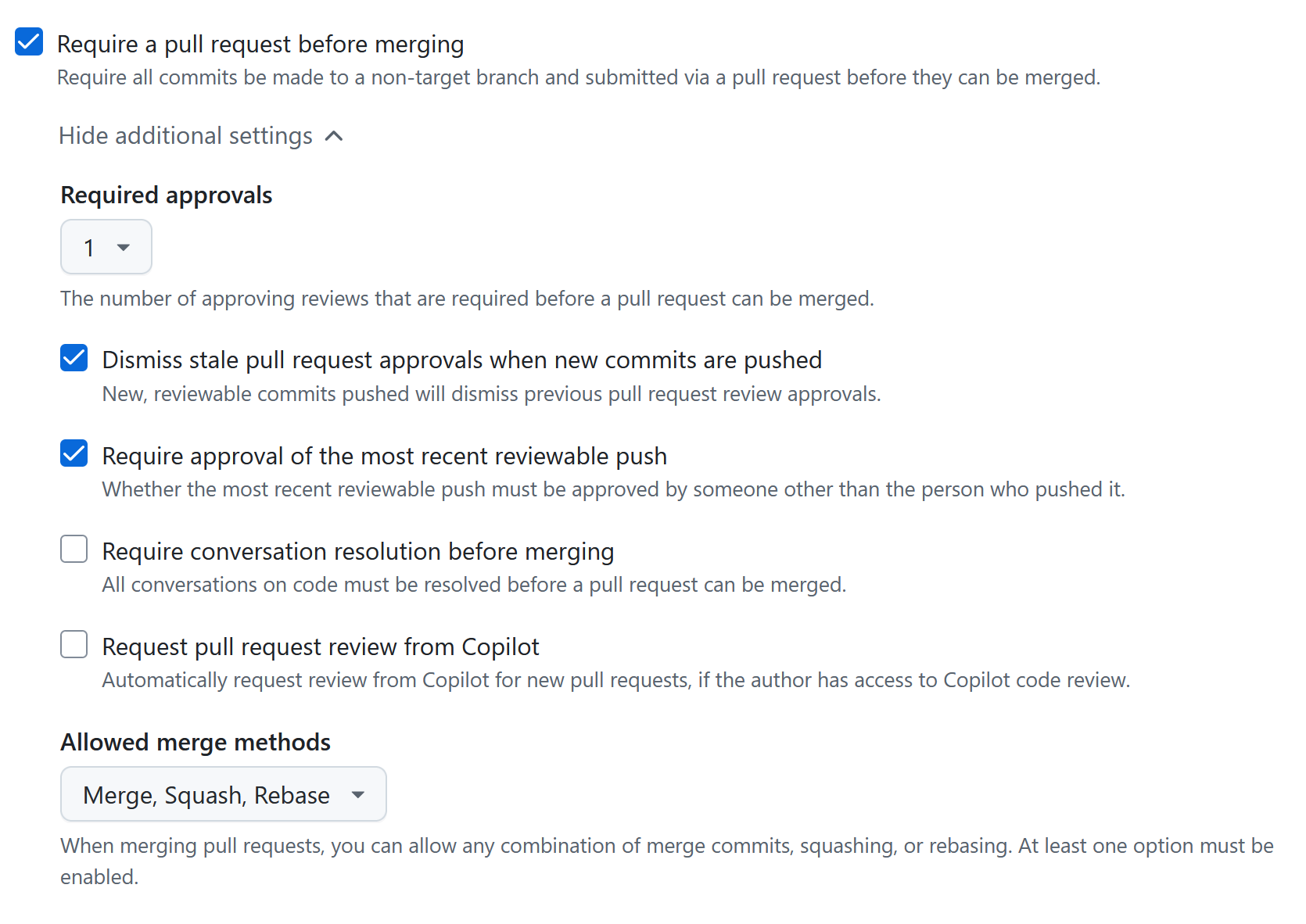

Require a pull request before merging: This is probably the most important protection rule, from a security point-of-view. Any commits to the protected branch(es) must go via a pull request. This prevents the scenario of a malicious contributor pushing directly to a production branch. Once this option is selected, there are a bunch of extra settings you can enable:

First, the number of required approvals should be set to 1 or greater. For extra sensitive branches you may want to consider setting this to 2 or more. Note that GitHub by default prevents self-review, meaning that I cannot open a pull request and then immediately approve it from the same account.

It could however be possible to make a perfectly legitimate pull request, get it approved, and then push your malicious code before merging. The option “Dismiss stale pull request approvals when commits are pushed” prevents this by dismissing existing approvals whenever new commits are pushed. Even worse, if this setting is not enabled, I could find someone else’s approved pull request, push my malicious code to their branch and then merge it.

Actually, even if the above option is enabled, I can still find someone else’s open pull request, push some malicious code, approve it myself and then merge it. This works as I’m not the author of the pull request, and GitHub then does not count it as self review. Enabling the option “Require approval of the most recent reviewable push” forces the most recent push to be approved by someone other than the author of the push, thus stopping this attack.
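The interplay of these options is easy to get wrong, so here is a simplified model of the rules as described above, written as a small Python class. It is a hypothetical illustration, not GitHub’s implementation, and the names alice, bob and mallory are made up. The attack function replays the scenario from the previous paragraphs under different settings.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    """Simplified, hypothetical model of the review rules described above."""
    author: str
    dismiss_stale: bool
    require_last_push_approval: bool
    required_approvals: int = 1
    approvals: set = field(default_factory=set)
    approved_since_last_push: set = field(default_factory=set)
    last_pusher: str = ""

    def __post_init__(self):
        self.last_pusher = self.author  # the author's push opened the PR

    def push(self, who: str) -> None:
        self.last_pusher = who
        self.approved_since_last_push = set()
        if self.dismiss_stale:
            self.approvals = set()  # "Dismiss stale pull request approvals..."

    def approve(self, who: str) -> None:
        if who == self.author:
            return  # self-review by the PR author is not allowed
        self.approvals.add(who)
        self.approved_since_last_push.add(who)

    def can_merge(self) -> bool:
        if len(self.approvals) < self.required_approvals:
            return False
        if self.require_last_push_approval:
            # "Require approval of the most recent reviewable push": someone
            # other than the last pusher must approve after that push.
            return bool(self.approved_since_last_push - {self.last_pusher})
        return True


def attack(dismiss_stale: bool, require_last_push_approval: bool) -> bool:
    """Bob approves Alice's PR; Mallory then pushes to it and approves it herself."""
    pr = PullRequest("alice", dismiss_stale, require_last_push_approval)
    pr.approve("bob")
    pr.push("mallory")
    pr.approve("mallory")
    return pr.can_merge()


print(attack(False, False))  # True: approvals survive Mallory's push
print(attack(True, False))   # True: Mallory is not the author, so her approval counts
print(attack(True, True))    # False: the last push needs someone else's approval
```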

The remaining sub-options when requiring a pull request are not security relevant.

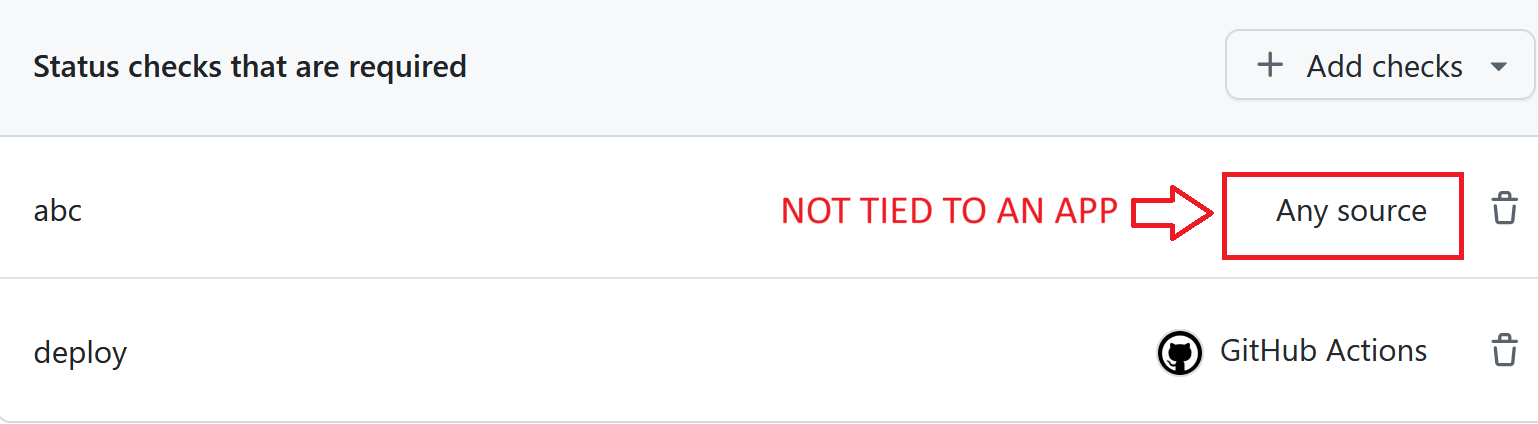

Require status checks to pass: TL;DR: not a good security control (with a possible exception if you really know what you’re doing).

When enabled, you select one or more checks that must succeed before the pull request can be merged. A check is tied to a particular commit or tag, and crucially has a name, a status (e.g. completed) and a conclusion (e.g. success). Checks can be created and updated through the GitHub API, but not directly by a regular user: it must be done either from a workflow or from a GitHub app.

Enforcing that a check tied to a workflow must pass is trivially bypassable by a collaborator, as they can simply modify the workflow in question to always pass.

Enforcing that a specific GitHub app’s check must succeed is fragile at best, and non-functioning at worst. Many bots have built in ways to skip their checks, e.g. by commenting @bot-name skip or similar on a pull request. I would not advise using this as your only security measure, but I guess it is technically possible to build a GitHub app that performs a check in such a way that it can’t be bypassed by a single collaborator. If you really want to do this, make sure that the required check you have configured is tied to a specific app, as otherwise any app may fake the check:
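To illustrate why the app binding matters, here is a toy model of a required check. The CheckRun fields, the app IDs and the check name security-review are all hypothetical; the point is only that a required check with no expected source accepts a successful check of the right name from any app.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class CheckRun:
    name: str
    app_id: int       # the GitHub App that created the check run
    conclusion: str   # e.g. "success" or "failure"


def required_check_passes(checks: Iterable[CheckRun], name: str,
                          expected_app_id: Optional[int]) -> bool:
    """With no expected app, a matching successful check from any app is accepted."""
    return any(
        c.name == name
        and c.conclusion == "success"
        and (expected_app_id is None or c.app_id == expected_app_id)
        for c in checks
    )


SECURITY_APP_ID = 1001  # hypothetical ID of the app that really runs the check
FAKE = CheckRun(name="security-review", app_id=4242, conclusion="success")

print(required_check_passes([FAKE], "security-review", None))             # True
print(required_check_passes([FAKE], "security-review", SECURITY_APP_ID))  # False
```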

Block force pushes: This option is not security relevant (even if disabled, one cannot perform a force push that conflicts with other enabled rules).

Require code scanning results: This option is not directly security relevant.

Am I Safe?

So, you’ve set up all the recommended protections for your main branch. If done right, your main branch is indeed safe from malicious code (or at least from it being pushed there by a single nefarious actor). But bringing GitHub Actions back into the mix, you probably want to use an action to deploy the code from your newly protected branch. You start writing a workflow:

```yaml
name: Deploy to production
on:
  push:
    branches:
      - main
jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      # do production things
      # <...>
```

If we ignore for now how this workflow can do anything sensitive while still being readable by anyone (we’ll get to that in a moment when we talk about secrets), this workflow is still not safe against malicious collaborators. This is due to a very fundamental aspect of workflow security:

A corollary of this, which deserves to be spelled out separately, is that:

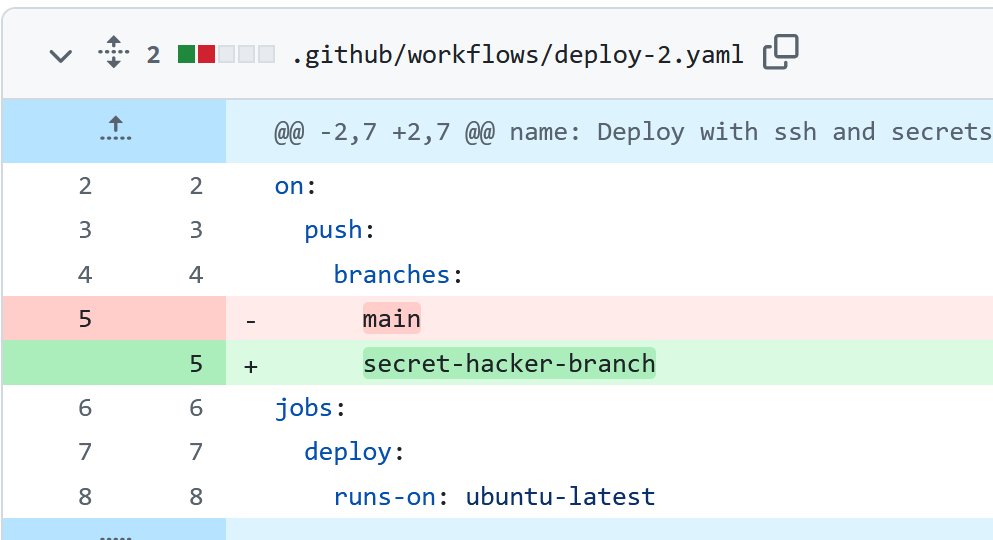

Take the above workflow as an example. I was feeling all clever and set up the triggers so that the workflow only runs on the main branch. But the malicious collaborator can simply edit the workflow file itself to make it trigger on pushes to their own branch:

The solution to this problem will be environments and/or using OIDC to integrate with cloud providers, but first, we need to look at how a workflow that is readable by everyone can perform sensitive operations safely.
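The reason the branch filter offers no protection is that, for a push, the workflow definition comes from the commit being pushed. Here is a toy Python model of that, where repo and workflows_run_on_push are hypothetical names and each branch simply maps workflow names to their push branch filters.

```python
def workflows_run_on_push(repo: dict, pushed_branch: str) -> list:
    """repo maps branch name -> {workflow name: branches listed under on.push}.

    The definitions are read from the branch being pushed, so the branch
    filter is whatever that branch's own copy of the file says.
    """
    definitions = repo[pushed_branch]
    return sorted(name for name, branches in definitions.items()
                  if pushed_branch in branches)


repo = {
    "main": {"Deploy to production": ["main"]},
    # The collaborator's copy of the same file, edited on their own branch.
    "HACKER": {"Deploy to production": ["main", "HACKER"]},
}

print(workflows_run_on_push(repo, "HACKER"))  # ['Deploy to production']
```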

GitHub Action Secrets

Admins of a repository can create secrets, which workflows can then use through the secrets context. For example:

```yaml
name: Deploy to production
on:
  push:
    branches:
      - main
jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - name: SSH to a server and do something
        env:
          USERNAME: ${{ secrets.SSH_USERNAME }}
          SERVER: ${{ secrets.SSH_SERVER }}
          PRIVATE_KEY: ${{ secrets.SSH_PRIVATE_KEY }}
        run: |
          echo "$PRIVATE_KEY" > /tmp/id_rsa
          chmod 600 /tmp/id_rsa
          ssh -i /tmp/id_rsa "$USERNAME@$SERVER" 'echo "<run some command>"'
      # <...>
```

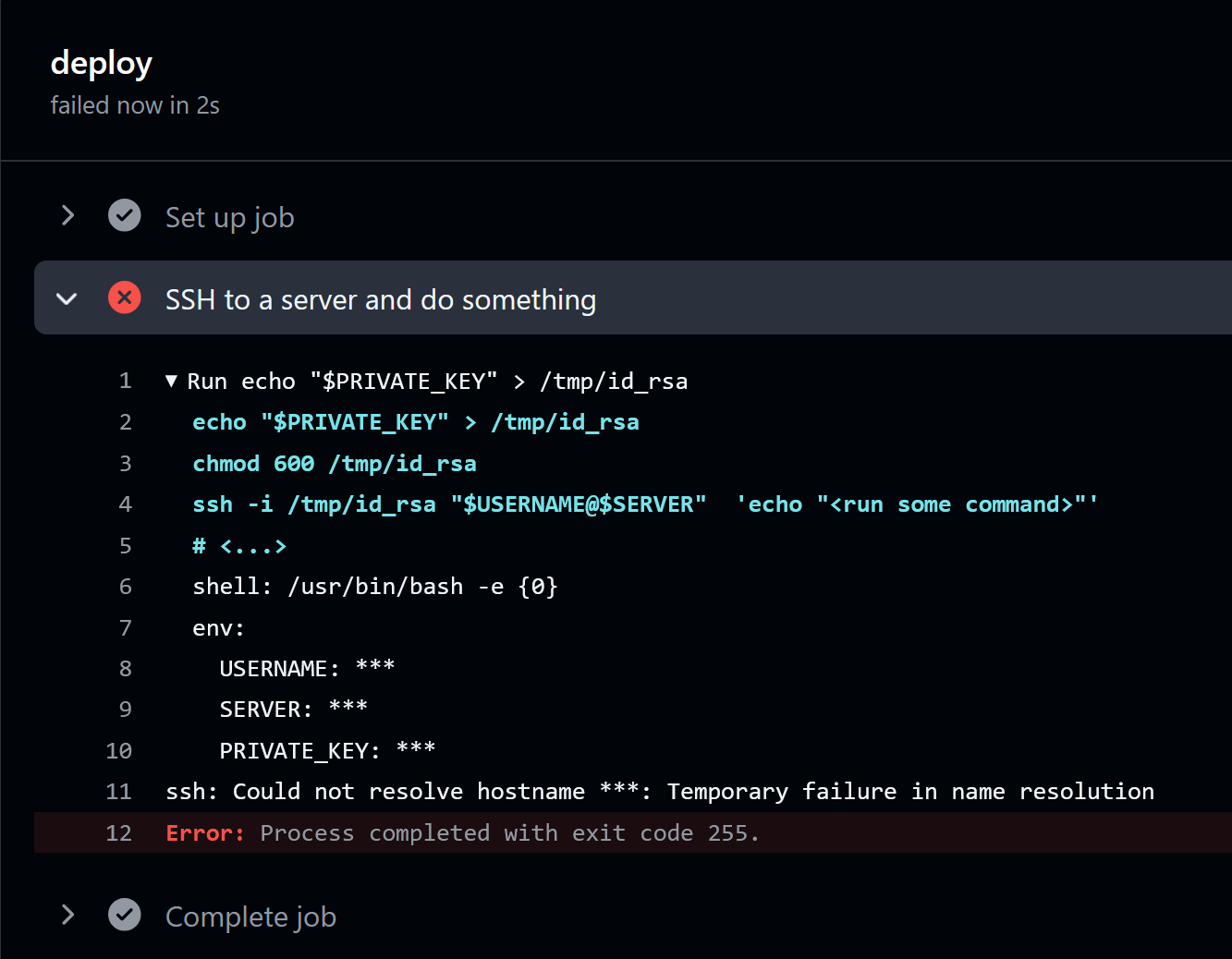

Even if someone with read permissions can read both the workflow file and any run logs, they will not be able to get the secret private key. In fact, even if you mess up or otherwise do something that would cause a secret to be printed to the run log, GitHub tries hard to save you by censoring any secrets, as in the below failed run:

What about collaborators? They can’t access the admin panel where secrets are managed, so maybe they also can’t read the secrets? At this point you should already know the answer:

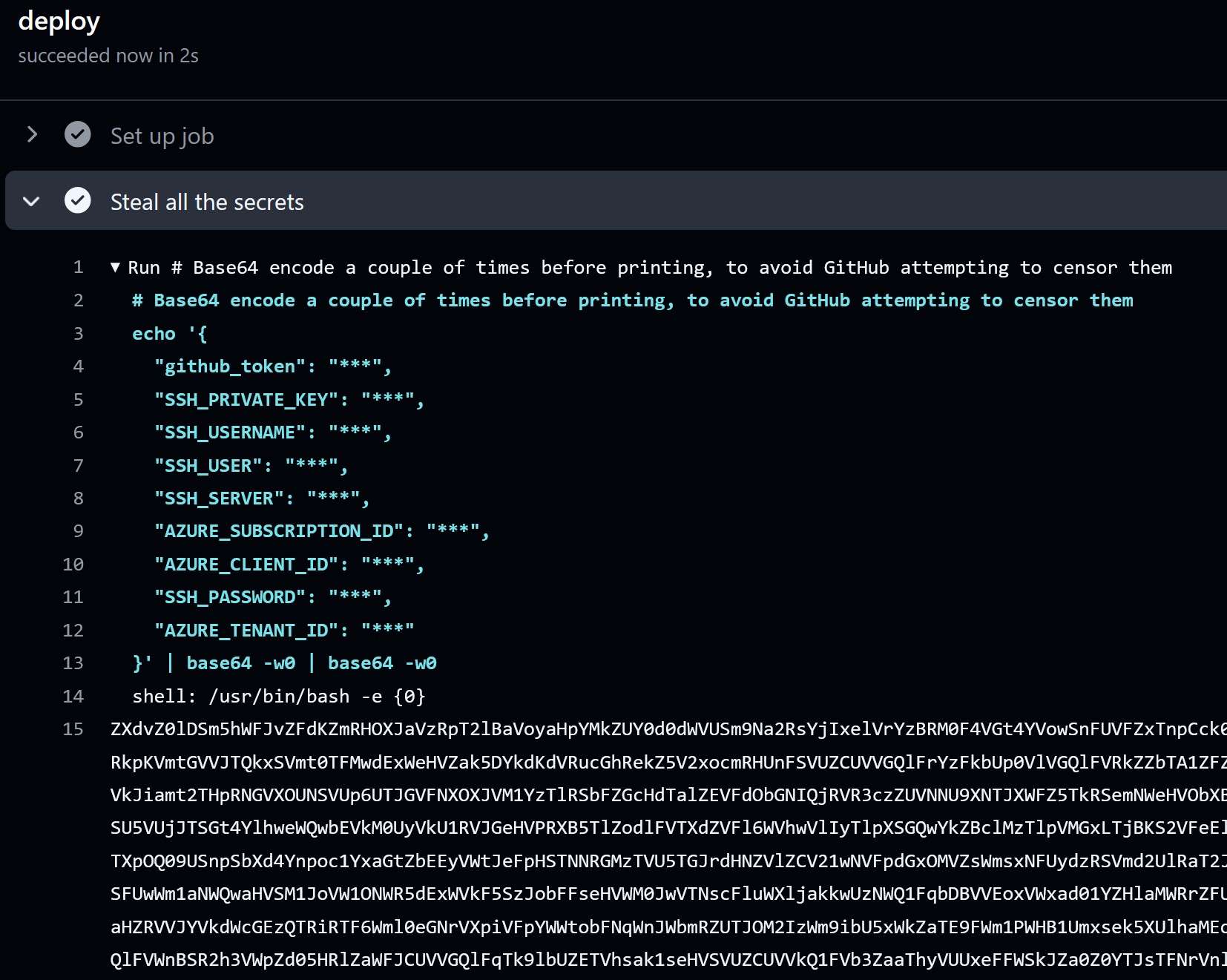

A collaborator can freely modify workflow files on unprotected branches, and there are no restrictions on which branches can access the repository secrets, so a collaborator can simply modify an existing workflow to print all secrets. A hacker simply modifies a workflow on the HACKER branch as below. Here the toJson function, which can be used in GitHub expressions, is very useful for reading out the whole secrets context without knowing the name of all keys:

```yaml
name: Deploy to production
on:
  push:
    branches:
      - HACKER
jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - name: Steal all the secrets
        run: |
          # Base64 encode a couple of times before printing, to avoid GitHub attempting to censor them
          echo '${{ toJson(secrets) }}' | base64 -w0 | base64 -w0
```

Pushing the workflow change to the HACKER branch will trigger it to run:

To view the secrets, simply double base64 decode the printed string:

```
$ echo "ZXdv...Zz09" | base64 -d | base64 -d
{
  "github_token": "<REDACTED>",
  "SSH_PRIVATE_KEY": "-----BEGIN OPENSSH PRIVATE KEY-----\n<REDACTED>",
  "SSH_USERNAME": "sofia",
  "SSH_USER": "my-user",
  "SSH_SERVER": "this-wont-resolve",
  <REDACTED>
```
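The encoding trick works because log masking looks for the secret values themselves. Below is a toy masker that only hides literal occurrences of each value; GitHub’s real masking may recognise more variants, so treat this as an illustration of the principle rather than of the runner’s exact behaviour. The sample values are the unredacted ones from the output above.

```python
import base64
import json


def mask(log_line: str, secrets: dict) -> str:
    # Toy masker: only replaces literal occurrences of each secret value.
    for value in secrets.values():
        if value:
            log_line = log_line.replace(value, "***")
    return log_line


def double_b64(text: str) -> str:
    # Equivalent of `base64 -w0 | base64 -w0` (no line wrapping).
    return base64.b64encode(base64.b64encode(text.encode())).decode()


secrets = {"SSH_USERNAME": "sofia", "SSH_SERVER": "this-wont-resolve"}
dumped = json.dumps(secrets)

print(mask(dumped, secrets))  # {"SSH_USERNAME": "***", "SSH_SERVER": "***"}

logged = mask(double_b64(dumped), secrets)  # no literal secret left to mask
recovered = json.loads(base64.b64decode(base64.b64decode(logged)))
print(recovered == secrets)  # True
```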

NB! If you are doing a pentest or a red team exercise, don’t just print all secrets like this, as they will be readable in the run logs by anyone who passes by. Instead, encrypt them first, for example using a public key. The default GitHub-hosted runners have openssl installed.

It is also possible to define secrets at the organization level, and then make them accessible to selected repositories. The exact same exfiltration will work for them as for the repository level secrets.

Environments

Luckily for us, there is a way to define secrets that cannot (necessarily) be read by all contributors, namely by defining secrets at the environment level.

Admins can create environments in a repository. Let’s say we’ve created prod and dev environments. The following workflow will run in the prod environment, as specified by the environment keyword:

```yaml
name: Deploy to production
on:
  push:
    branches:
      - main
jobs:
  deploy:
    runs-on: ubuntu-latest
    environment: prod
    steps:
      - name: SSH to a server and do something
        env:
          USERNAME: ${{ secrets.SSH_USERNAME }}
          SERVER: ${{ secrets.SSH_SERVER }}
          PRIVATE_KEY: ${{ secrets.SSH_PRIVATE_KEY }}
        run: |
          echo "$PRIVATE_KEY" > /tmp/id_rsa
          chmod 600 /tmp/id_rsa
          ssh -i /tmp/id_rsa "$USERNAME@$SERVER" 'echo "<run some command>"'
      # <...>
```

If there are secrets named SSH_USERNAME, SSH_SERVER and SSH_PRIVATE_KEY defined in the prod environment, then these take precedence over any repository-level or organization-level secrets. That way we can have separate prod and dev secrets, where the former could be more sensitive than the latter.
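The precedence rule can be sketched as a dictionary merge where more specific scopes overwrite less specific ones. resolve_secrets and the example values are hypothetical; the ordering (environment over repository over organization) is the part that reflects GitHub’s behaviour.

```python
from typing import Optional


def resolve_secrets(organization: dict, repository: dict,
                    environment: Optional[dict] = None) -> dict:
    """Most specific scope wins: environment > repository > organization."""
    resolved = {}
    for scope in (organization, repository, environment or {}):
        resolved.update(scope)  # later (more specific) scopes overwrite earlier ones
    return resolved


org = {"SSH_USERNAME": "org-user"}
repo = {"SSH_USERNAME": "repo-user", "SSH_SERVER": "dev-server"}
prod = {"SSH_SERVER": "prod-server", "SSH_PRIVATE_KEY": "prod-key"}

print(resolve_secrets(org, repo))        # job without an environment
print(resolve_secrets(org, repo, prod))  # job with environment: prod
```

Note that a job without an environment still sees every repository and organization secret, which is why the sensitive values belong in the environment scope.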

It’s all very well to name something prod, but environments have no inherent protections. So again, our malicious collaborator can simply modify an existing workflow to deploy to the desired environment and exfiltrate all secrets there (running a workflow in a specific environment is referred to as deploying to that environment).

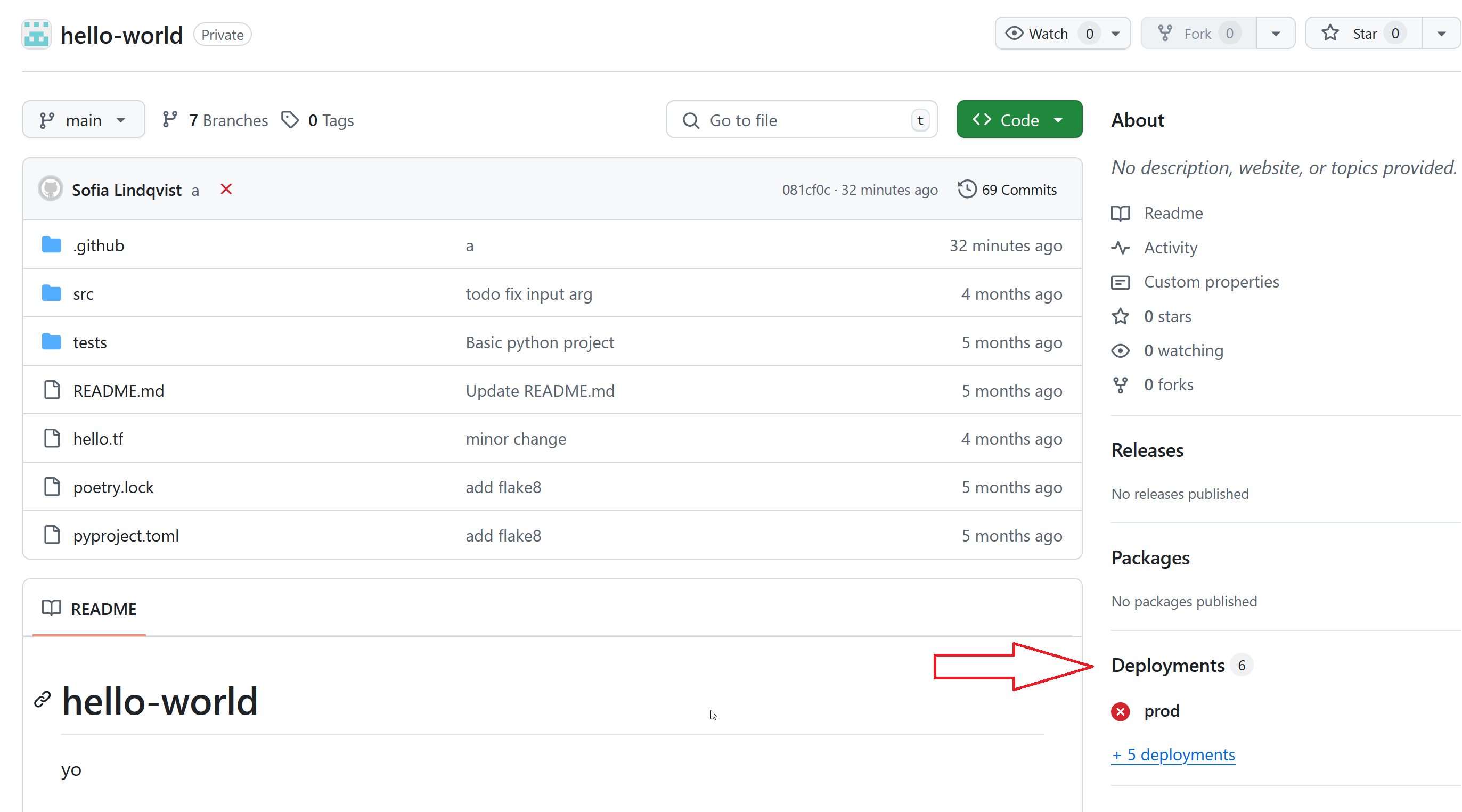

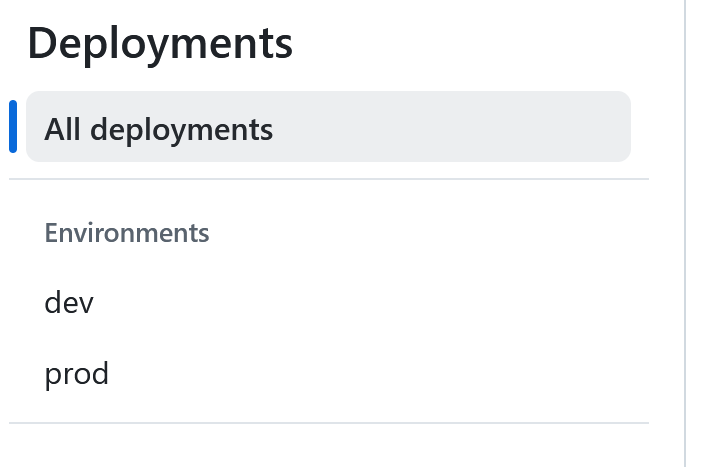

Note that anyone with read permissions or higher can get the list of environments by clicking “Deployments” in the bottom right of the main repository view:

Protecting an Environment

In the environment settings, there are various ways to limit who and what can deploy. Note that the available options may be more limited than what you see here, unless you’re in a public repository or your organization has an enterprise plan.

The available protections are as follows.

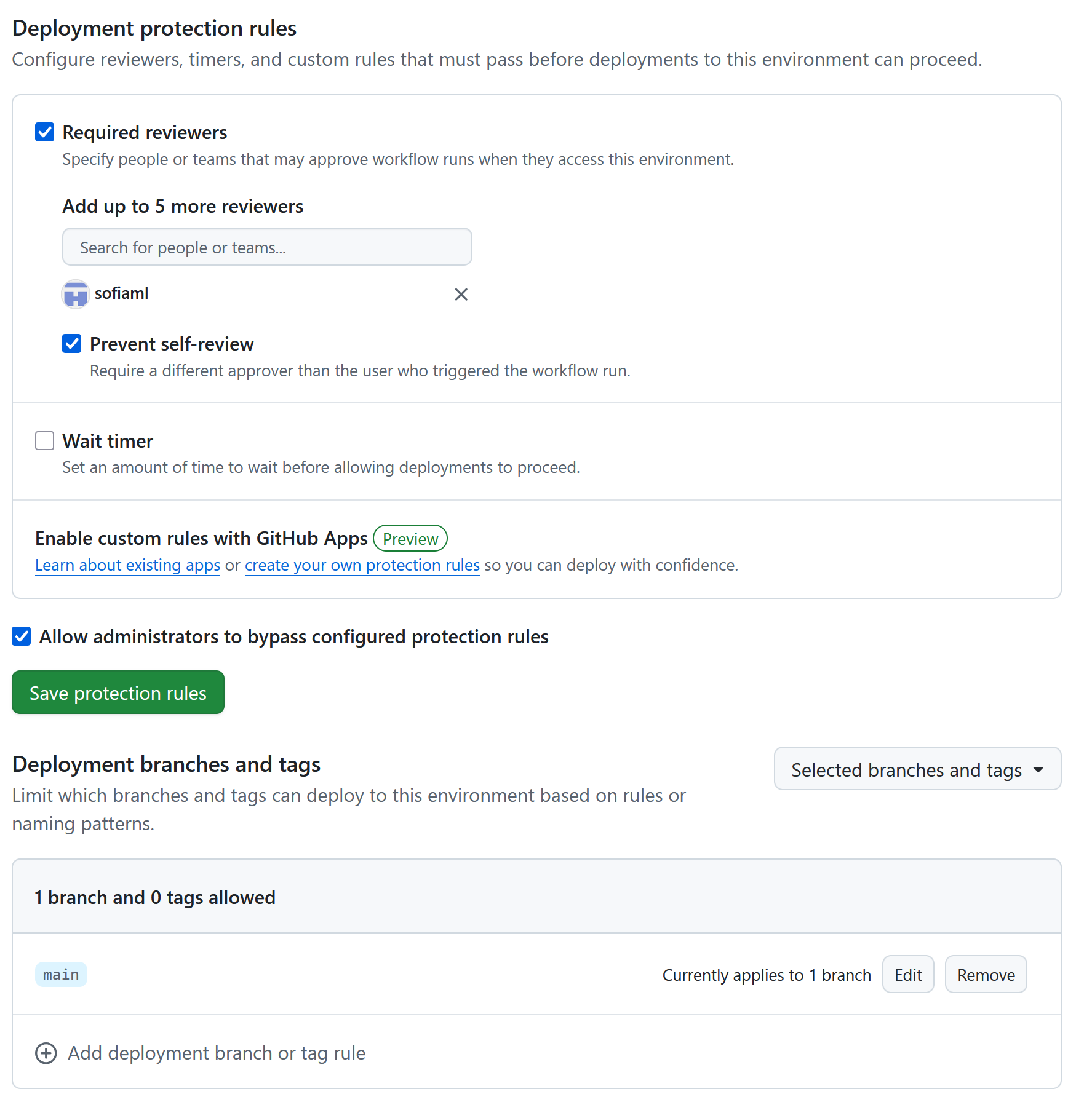

Required reviewers: When this option is specified, one specifies a list of people and/or teams that may approve a workflow. When a workflow run is triggered that would deploy to that environment, then one of the allowed people must accept the run in order for it to proceed. If this is being used as a security option, the “Prevent self-review” option must be enabled, as otherwise a malicious collaborator can trigger a deploy and then immediately approve it themselves.

Deployment branches and tags: This is perhaps the most important option. Here one can configure from which branches and tags it is possible to deploy to this environment. You can either specify which branches and tags are allowed by choosing “selected branches and tags”, or choose “Protected branches only”. NB! The latter only applies to branches with classic branch protections. When setting up branch protections the GitHub UI steers you towards setting up a ruleset instead, so it may very well be that this option does not do what you expect.
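A toy model of how these two protections combine might look like the following. Environment and can_deploy are hypothetical names, and for simplicity the allowed refs are exact names, whereas GitHub also accepts name patterns.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Environment:
    """Toy model of the two environment protections described above."""
    allowed_refs: set                                    # "Deployment branches and tags"
    required_reviewers: set = field(default_factory=set)  # "Required reviewers"
    prevent_self_review: bool = True                      # "Prevent self-review"


def can_deploy(env: Environment, ref: str, triggered_by: str,
               approver: Optional[str] = None) -> bool:
    if ref not in env.allowed_refs:
        return False  # the run is rejected for this environment
    if env.required_reviewers:
        if approver not in env.required_reviewers:
            return False
        if env.prevent_self_review and approver == triggered_by:
            return False
    return True


prod = Environment(allowed_refs={"main"}, required_reviewers={"alice", "bob"})

print(can_deploy(prod, "HACKER", "mallory", "alice"))  # False: branch not allowed
print(can_deploy(prod, "main", "alice", "alice"))      # False: self-review
print(can_deploy(prod, "main", "alice", "bob"))        # True
```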

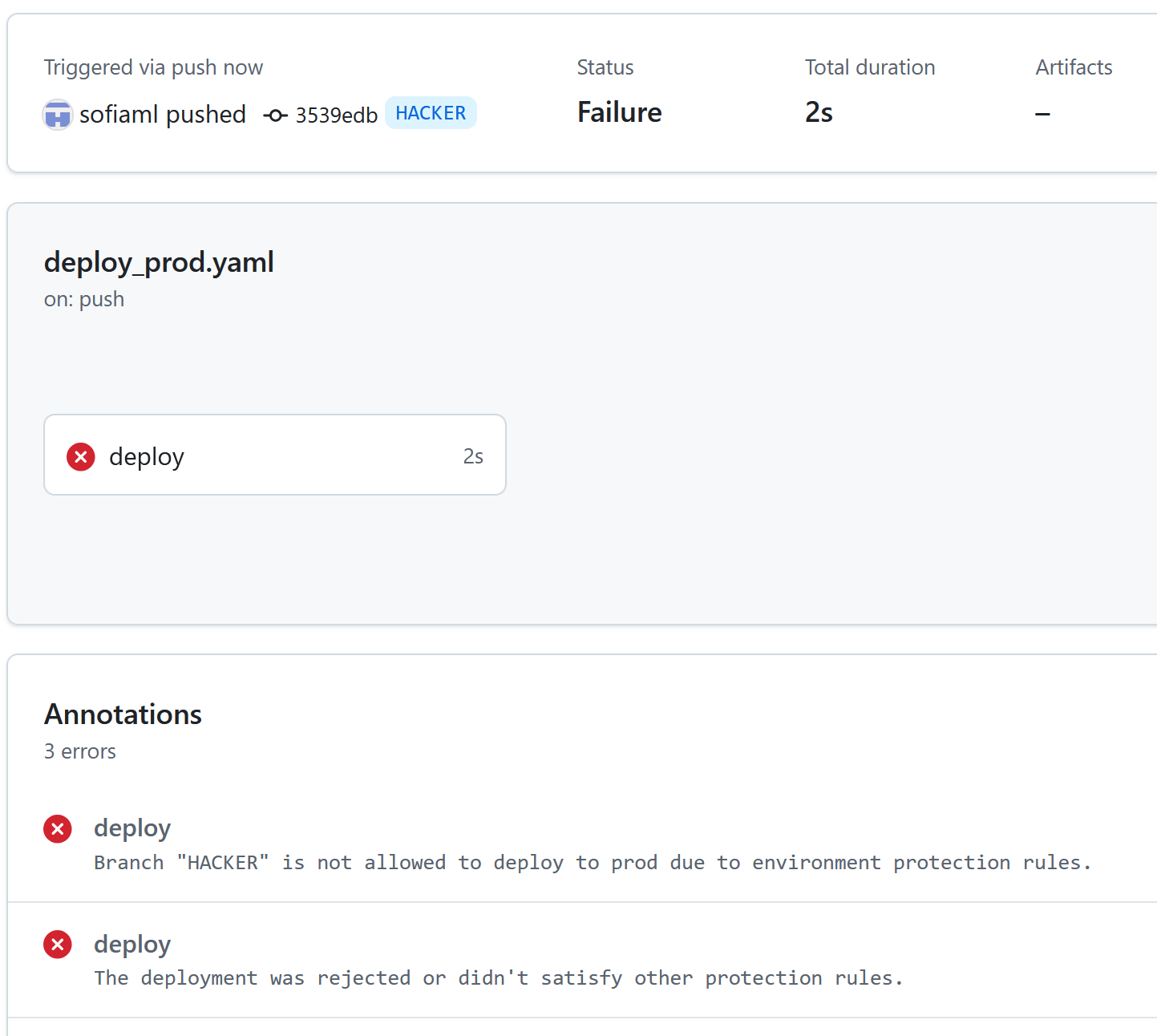

If a malicious contributor tries to modify a workflow to run in a properly protected environment it will fail:

Tags

In GitHub, tags are commonly used to mark releases. Maybe you have a latest tag that always points to the latest release, or a stable tag that always should point to a stable release. These may also need to be protected. For example, you don’t want a malicious actor tagging their malware with the latest tag, causing other parts of your organization, or maybe unsuspecting third parties, to use the malicious code instead of the intended release.

By default, anyone with contributor access can create a new tag or modify an existing one, and can also create the commits and releases those tags refer to. So the default case is that anyone with contributor access has complete control over your tags and what they point to.

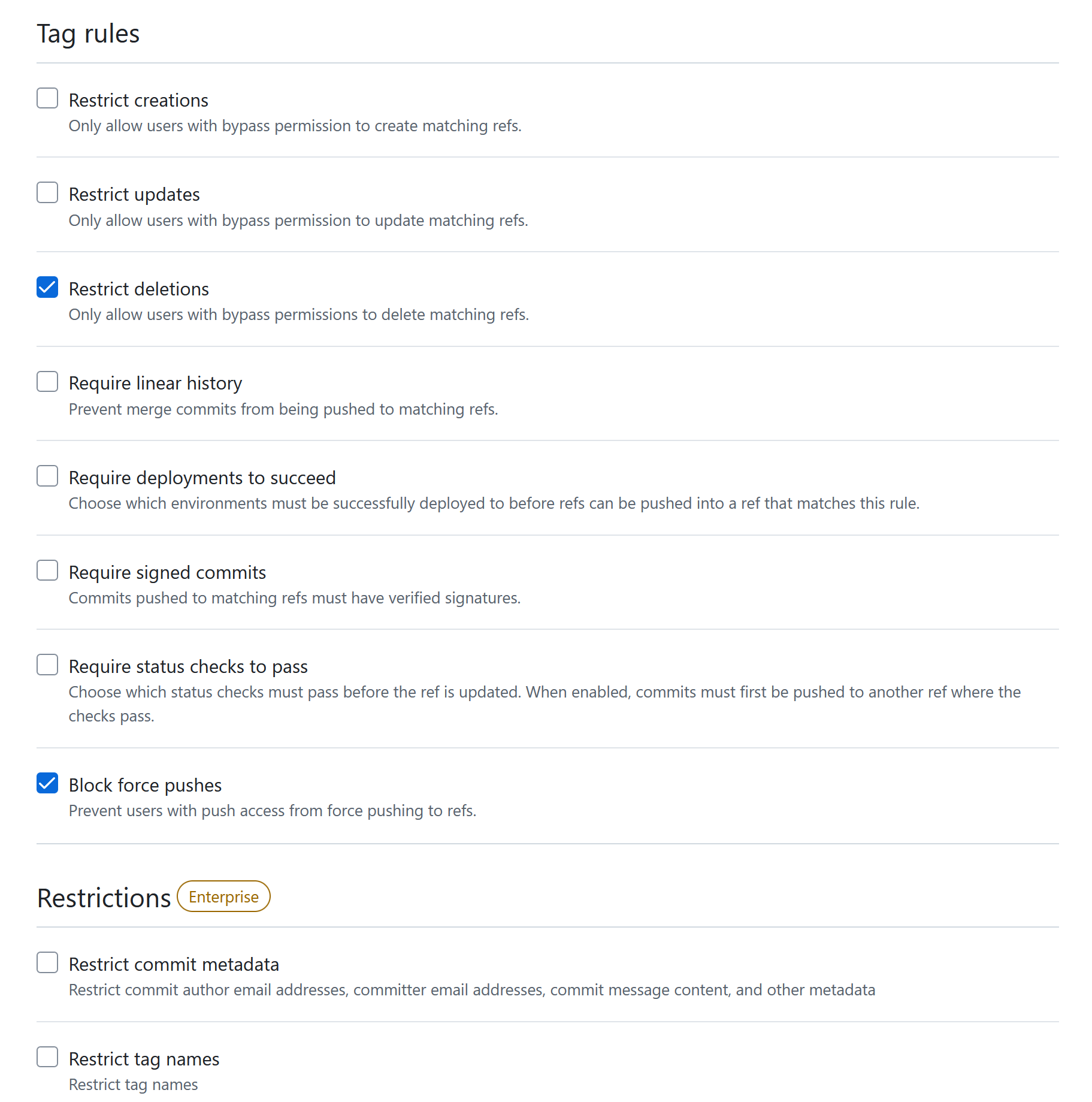

There are some tag protections that can be added, similarly to how one adds branch protections. These are the available rules:

Restrict creations/updates/deletions: All of these restrict the specified action to only users with bypass permissions. Bypass permissions are added separately. This obviously has no effect unless the set of users with bypass permissions is more restrictive than the set of users with contributor access to begin with.

Require linear history: Similar to the corresponding branch protection.

Require deployments to succeed: This works by specifying a list of deployments that must succeed on the underlying commit before a release can be tagged with the specified tag(s). In other words, you specify a list of environments, and then for each of those, a workflow must have successfully deployed to (= run in) that environment off the underlying commit of the target release. This is the only protection rule that actually allows us to satisfy the four-eyes principle for tags, and the way to achieve it is as follows:

- For your protected tag (let’s say latest), require successful deployment to at least one environment (let’s say prod).
- For the environment (prod), add the “Deployment branches and tags” protection, and require that it can only be deployed to from a specific branch (let’s say main).
- For the specified branch (main), add branch protections requiring a pull request before merging, with all the options outlined previously enabled: at least one approval required, “Dismiss stale pull request approvals when commits are pushed” and “Require approval of the most recent reviewable push”.
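The chain above can be expressed as a small checker. This is a hypothetical sketch over made-up data structures, not an API GitHub provides: a tag counts as protected only if at least one required environment is restricted to branches that all enforce the pull request rules listed above. One protected environment is enough because every required deployment has to succeed.

```python
from dataclasses import dataclass


@dataclass
class BranchRule:
    require_pull_request: bool
    required_approvals: int
    dismiss_stale_approvals: bool
    require_last_push_approval: bool

    def is_four_eyes(self) -> bool:
        return (self.require_pull_request and self.required_approvals >= 1
                and self.dismiss_stale_approvals and self.require_last_push_approval)


def env_is_protected(env: str, env_branches: dict, branch_rules: dict) -> bool:
    branches = env_branches.get(env)
    if not branches:
        return False  # no deployment-branch restriction: any branch can deploy
    # Every allowed branch must be protected, or it becomes the way in.
    return all(b in branch_rules and branch_rules[b].is_four_eyes() for b in branches)


def tag_is_protected(required_envs: list, env_branches: dict, branch_rules: dict) -> bool:
    # All required deployments must succeed, so one protected environment gates the tag.
    return any(env_is_protected(e, env_branches, branch_rules) for e in required_envs)


good = BranchRule(True, 1, True, True)
print(tag_is_protected(["prod"], {"prod": ["main"]}, {"main": good}))         # True
print(tag_is_protected(["prod"], {"prod": ["main", "dev"]}, {"main": good}))  # False
```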

Require signed commits: Similar to the corresponding branch protection.

Require status checks to pass: Similar to the corresponding branch protection.

Block force pushes: Similar to the corresponding branch protection.

Summary: GitHub-only Setup

In summary, if you want to do sensitive things using GitHub Actions, and you don’t want a single contributor to be able to compromise your production environment, you should:

- Use the env keyword to set up environment variables instead of using contexts directly in workflow steps, and then access the environment variables using regular Linux syntax like $MY_VARIABLE.
- Protect your default or production branch with the following options:
  - Require pull requests before merging.
  - Require one or more approvals on pull requests.
  - Enable the option “Dismiss stale pull request approvals when commits are pushed”.
  - Enable the option “Require approval of the most recent reviewable push”.
- Store any sensitive values in environment secrets.
- Protect any environments that have production secrets with the following options:
  - Configure which branch(es) may deploy to the environment. Ensure that all allowed branches are protected as described above.
  - As an optional extra layer of security, set up required reviews for deploying to the environment. In this case, make sure to enable “Prevent self-review”.
- Protect any sensitive tags with the following options:
  - Require deployments to succeed.
  - For the selected deployments, ensure that the environments are protected as described above.

Conclusion

The topic of security in GitHub Actions is obviously much larger than what I manage to cover in a couple of blog posts. At the end of the day, if you have a GitHub CI/CD setup and want to be as secure as possible, the best thing you can do is probably to perform a security test, either as part of a bigger penetration test or as a standalone exercise.

Head over to part 2 for details on integrating GitHub Actions with Azure.